Redefining Web Development: The Unbelievable Power of ChatGPT in a NextJS + React App

Moved from Medium. Original Post Date: April 10th 2023.

If anything, this post documents in detail the OpenAI GPT 4 capabilities as of April 2023, a good reminder of how far we've come. In this article, we’re diving headfirst into the remarkable potential of OpenAI’s ChatGPT and exploring how effortlessly it can be leveraged in a NextJS and React app. I hope you’ll find valuable insights into the development process, including the time required to code various components and the simplicity of incorporating ChatGPT into modern web applications.

Interestingly enough, I managed to squeeze in this project during my 10-day trip to Portugal, juggling multiple commitments, such as:

Supporting my 12-year-old son as he joins Washington’s Soccer EPD team in the Iber Cup held in Portugal.

Tackling end-of-quarter work responsibilities, attending three meetings totaling four hours, and dedicating around six hours to focused work.

Spending quality time with my wife as we discover the enchanting city of Lisbon.

Reuniting with my childhood friend, Abbass, after 12 long years. He traveled from Germany to spend two memorable days together with our families.

Despite the packed schedule, I was determined to harness the power of ChatGPT and bring a new feature to life before returning to the daily grind in Seattle, WA. So, join me as I share my experience of balancing work, family, and cutting-edge web development on this unforgettable Portuguese adventure.

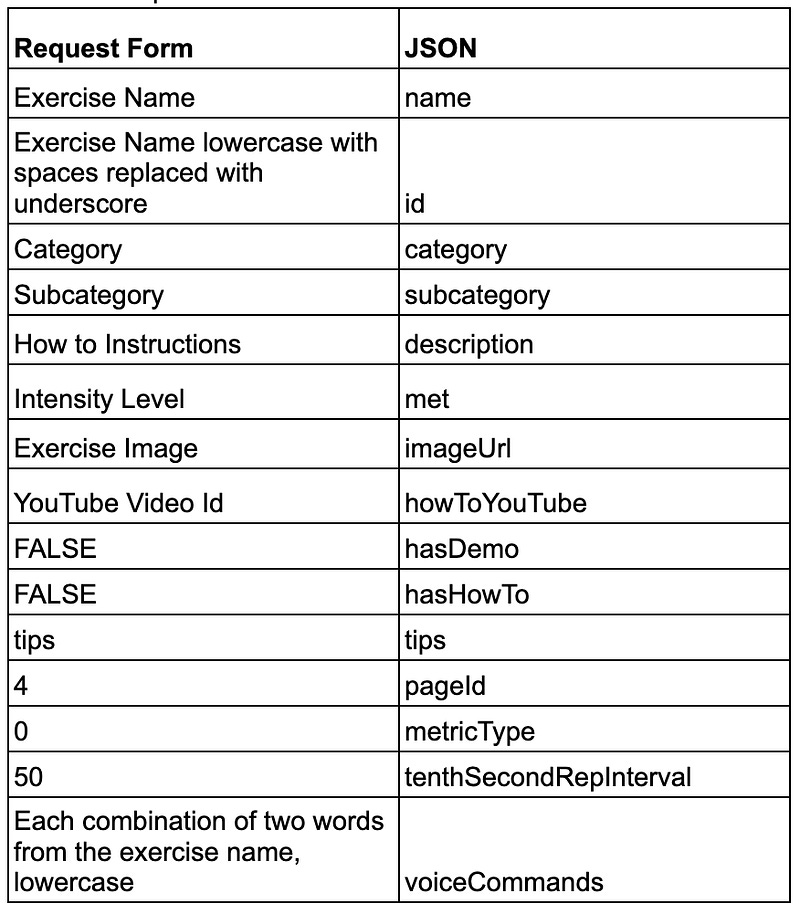

Legend

💬 for working on this article and writing code with the help of ChatGPT.

⚽ for soccer-related activities.

🫶 for fun and quality time. You’ll notice I won’t be sharing out any details on this front, just to keep this article’s size manageable.

👔 updates related to spending time doing work for my employer.

Disclaimer: While I don’t identify myself as a web developer per se, my background as a Software Engineering Manager and a generalist has equipped me with a passion for learning new technologies — especially when they can be used to achieve beneficial outcomes for others. So, regardless of your expertise level, I hope you’ll find valuable takeaways from my experience as I dabble in the world of web development with ChatGPT, NextJS, and React. Happy reading!

Problem Statement

Over the past few years, I have dedicated 2–12 hours per week to develop a mobile app, Handstand Quest, aimed at helping individuals learn or improve their handstand skills. Four months ago, I decided to expand the project by creating a complementary web application using NextJS and React to enhance my React development expertise.

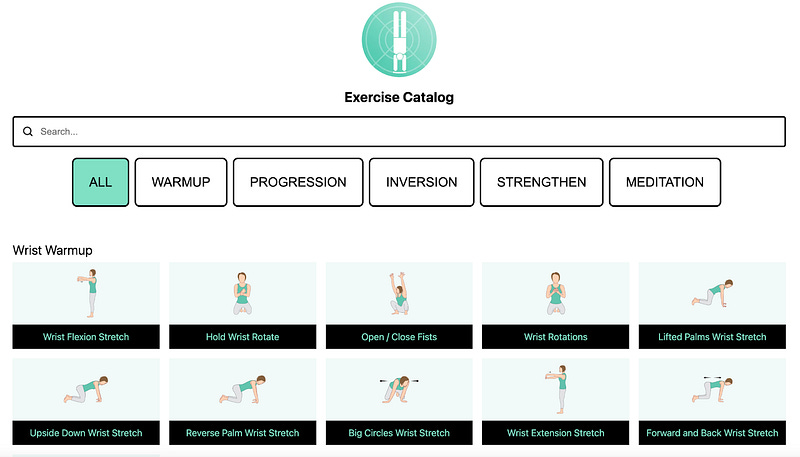

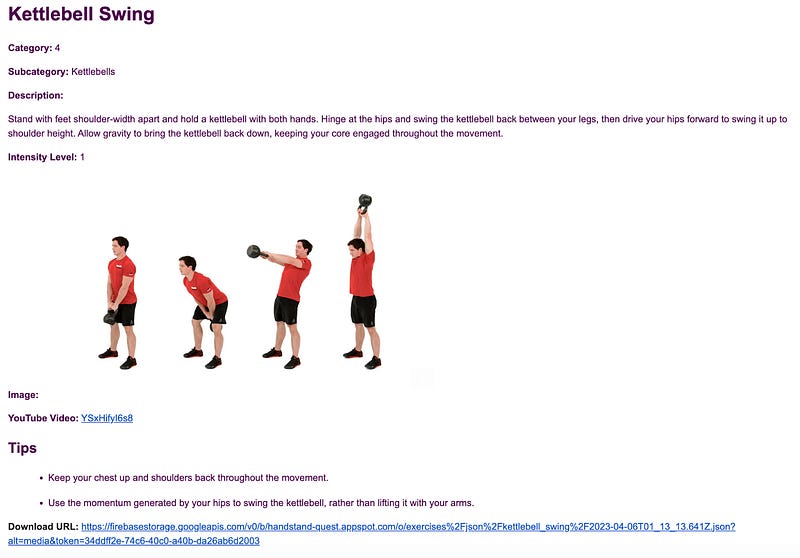

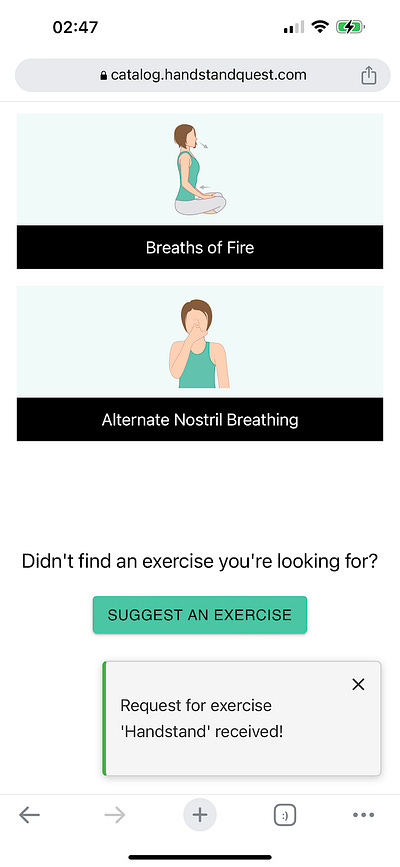

The existing web application, available at https://catalog.handstandquest.com, displays a catalog of exercises, allowing users to access individual exercise pages for detailed instructions and YouTube videos.

To improve the user experience and encourage engagement, I seek to implement a straightforward and efficient mechanism at the bottom of the exercise list for users to suggest new exercises with minimal effort. This feature aims to keep the catalog fresh, comprehensive, and tailored to the diverse interests and preferences of the user base.

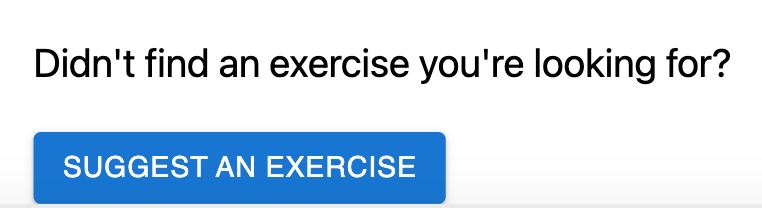

As you reach the bottom of the exercise catalog, you’ll notice an empty space. In this area, I aim to introduce a user-friendly mechanism for suggesting new exercises.

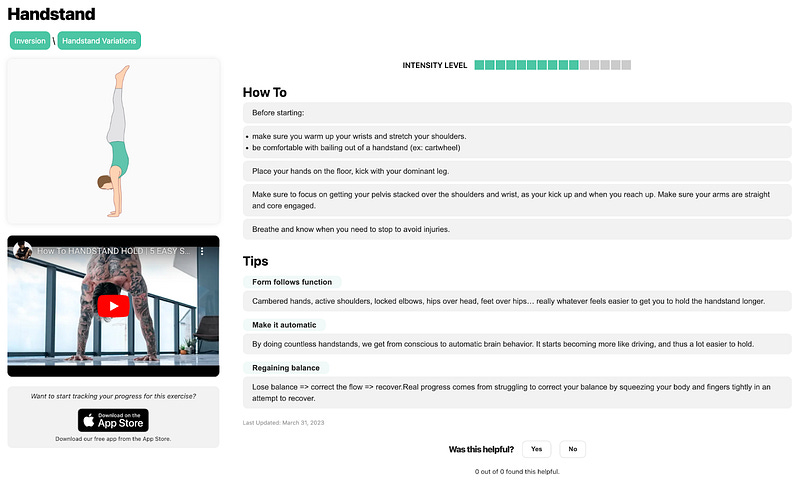

To provide some context on the type of data that would need to be provided to suggest an exercise, let’s take a look at the current design of an exercise page within the application.

I would like to leverage GPT 4 to generate most of the code. I will not be listing all the code generated by ChatGPT. Instead, I will show the progression of the user interface and mention the specific prompts or tweaks to the code that I’ve had to make to get it to work the way I would expect.

Initial Prompt to ChatGPT

I started right away, telling ChatGPT what I want.

On my NextJS React TypeScript app, I would like to introduce a component that does the following:

- Asks the user, “Didn’t find an exercise you’re looking for?”. Place under that a button for the user to “Suggest an exercise”

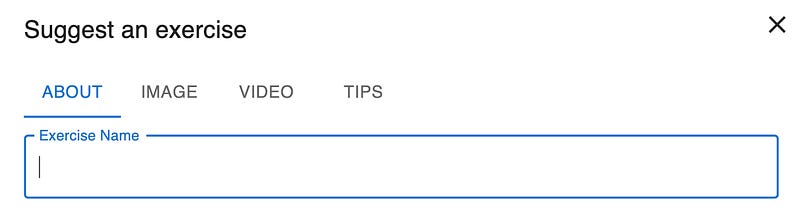

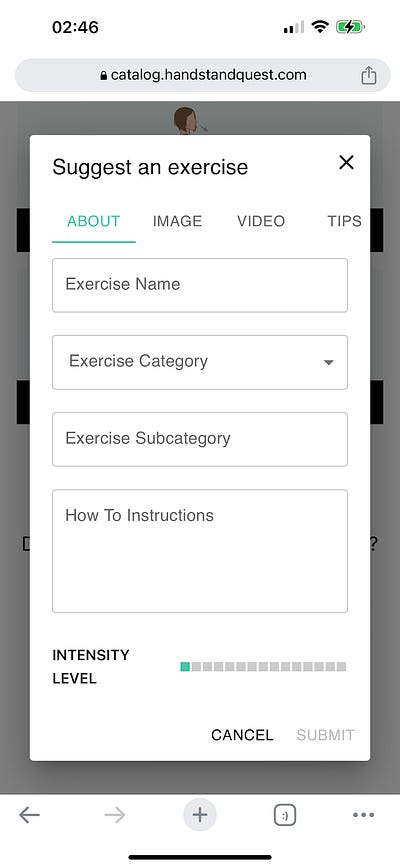

- When the button is clicked, a very user friendly dialog opens up and it lets the user specify exercise information and submit or cancel. The dialog also has a close button in the top right corner.

- The exercise info consist of the following: Exercise name, Exercise category selected from a dropdown, Exercise subcategory, How To Instructions, One or more tips each having a title and a body, an image that could be uploaded or referenced via a link or looked up from a free online image library, a video that can be looked up from YouTube, and an intensity level that can range from 1 to 15.

- I would like to give the user an option, only after specifying a title, to fully generate the rest of the information by calling the GPT API.
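The fields listed in that prompt can be modeled roughly as the TypeScript type below. This is only a sketch to make the requirements concrete: the type and function names (`ExerciseSuggestionForm`, `clampIntensity`, `canGenerateWithGpt`) are assumptions for illustration and are not taken from the code ChatGPT generated or from the actual app.

```typescript
// Hypothetical shape of a suggested exercise; all names are illustrative.
interface Tip {
  title: string;
  body: string;
}

interface ExerciseSuggestionForm {
  name: string;
  category: string;
  subcategory: string;
  howTo: string;
  tips: Tip[];
  imageUrl: string;
  videoUrl: string;
  intensityLevel: number; // expected range: 1 to 15
}

const MIN_INTENSITY = 1;
const MAX_INTENSITY = 15;

// Keep the intensity inside the 1-15 range described in the prompt.
function clampIntensity(value: number): number {
  if (isNaN(value)) return MIN_INTENSITY;
  return Math.min(MAX_INTENSITY, Math.max(MIN_INTENSITY, Math.round(value)));
}

// The prompt only offers GPT generation once a title (name) has been entered.
function canGenerateWithGpt(form: ExerciseSuggestionForm): boolean {
  return form.name.trim().length > 0;
}

// A blank form to start from.
function emptyForm(): ExerciseSuggestionForm {
  return {
    name: "",
    category: "",
    subcategory: "",
    howTo: "",
    tips: [],
    imageUrl: "",
    videoUrl: "",
    intensityLevel: MIN_INTENSITY,
  };
}
```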

At a high level, without the code, here are the steps that ChatGPT asked me to follow:

Install Material-UI and its dependencies, if you haven’t already.

Create a new file named ExerciseSuggestion.tsx and import the necessary dependencies.

Create the ExerciseSuggestion component with the following structure.

Add state variables and handlers for opening and closing the dialog, as well as handling form submission.

Implement the exercise input form inside the DialogContent component.

Add state variables and handlers for the form fields and GPT API call.

Implement the form field change handlers and bind them to the form fields.

Add the exercise category dropdown options and other form fields.

Create the handleTipsChange function for handling the addition and updates of tips.

Implement the tips input form and add the handleTipsChange function.

Implement the inputs for image and video.

Step 1: NPM Install

On running the NPM install on the command line, I got this error.

While resolving: undefined@undefined

Found: react@18.2.0

Could not resolve dependency: peer react@"^16.8.0 || ^17.0.0" from @material-ui/core@4.12.4

I prompted ChatGPT that I got this error on npm install. It gave me options to downgrade React or upgrade to the latest Material-UI version. I chose the latter. Note that it also suggested that if I choose the latter, I need to update my import statements.

Running the new NPM install succeeded.

Steps 2, 3, 4 & 5: ExerciseSuggestion Component

To begin with, I asked it to rewrite this file based on the latest Material-UI version. I think it included steps 2–5 in the generated code.

It gave additional NPM components to install, which executed as expected on the command line. I then created a new file called ExerciseSuggestion.tsx and copied the code from ChatGPT.

It also included the following summary of what the file does:

In this example, I’ve created a basic ExerciseSuggestion component that displays a button and a modal dialog when clicked. The dialog contains input fields for the exercise name, category, subcategory, and how-to instructions. You can extend this component by adding more input fields for tips, image, video, and intensity level as required.

Note that this example assumes you have an array of categories passed as a prop. You can replace this with your own data or fetch it from an API as needed.

New Step: Plugging the Component

I told GPT to include the generated component in a parent component, that has categories in the following format:

    exerciseCategories: {
      id: ExerciseCategory
      categoryName: string
    }[]

I included the component as it suggested, while ignoring some of its other suggestions, such as initializing the categories array.

    <ExerciseSuggestion categories={exerciseCategories} />

It then recommended that I update the ExerciseSuggestion component to accept the categories prop and map the categories correctly inside the Select component.

So I plugged in the code as it suggested. The only other thing I had to change was the type of the id, from string to my own defined ExerciseCategory enum.

import { ExerciseCategory } from '../lib/exercises'

interface ExerciseSuggestionProps {

categories: { id: ExerciseCategory; categoryName: string }[];

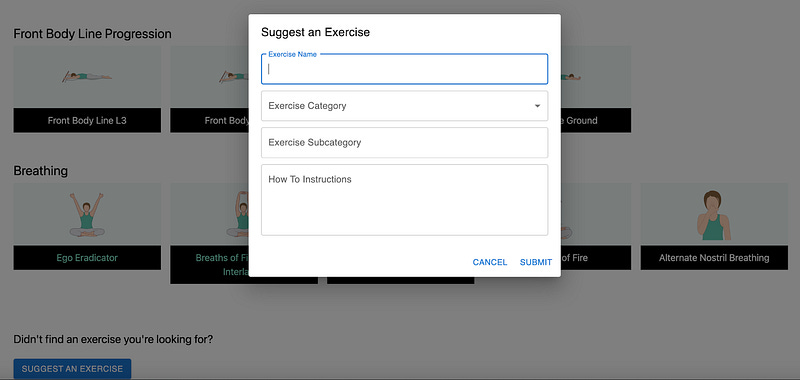

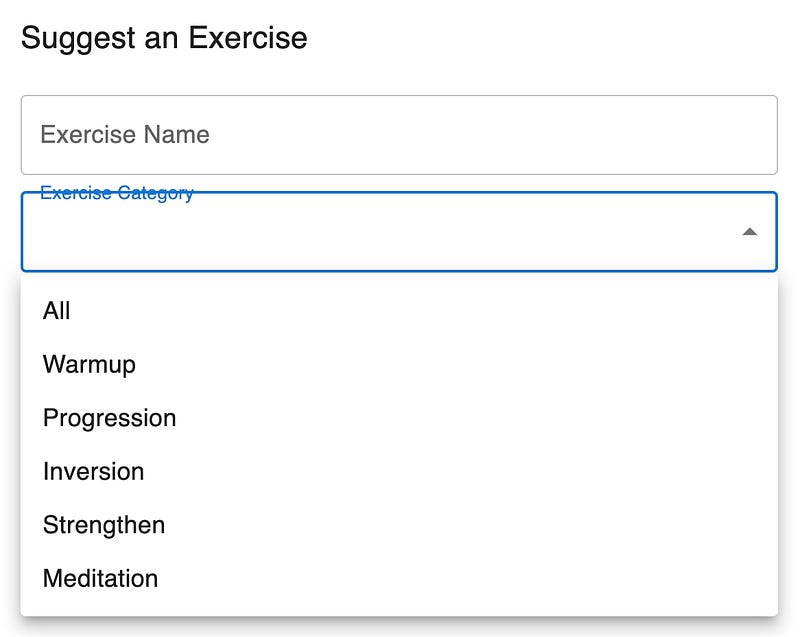

}This is how the app looks like now.

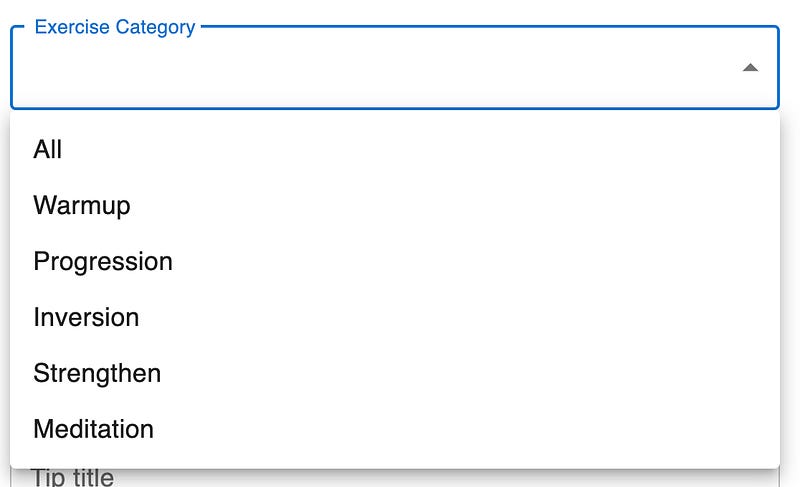

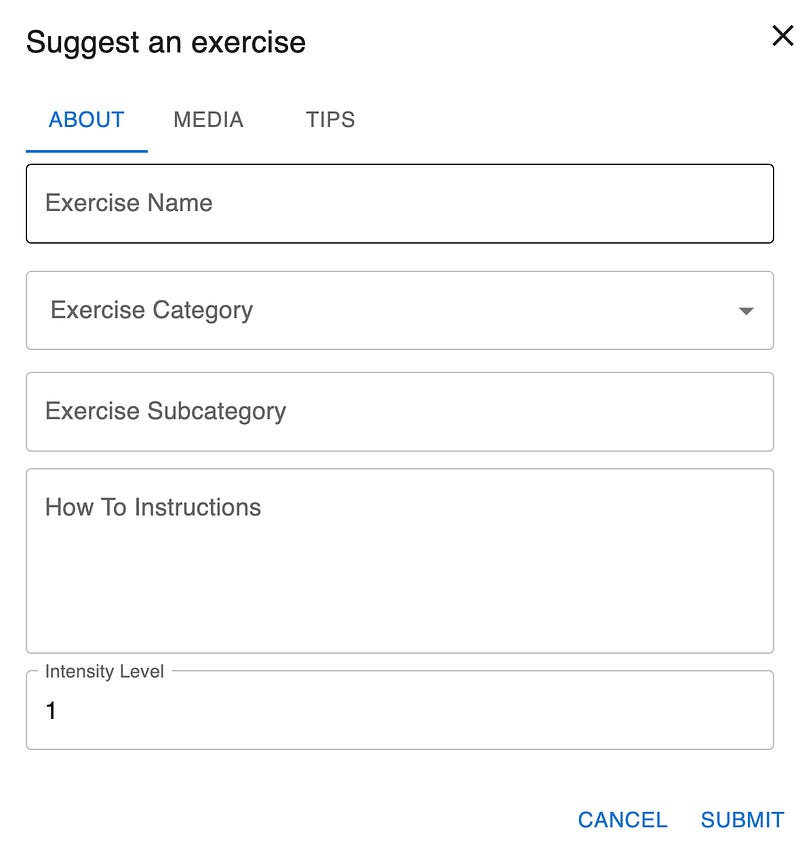

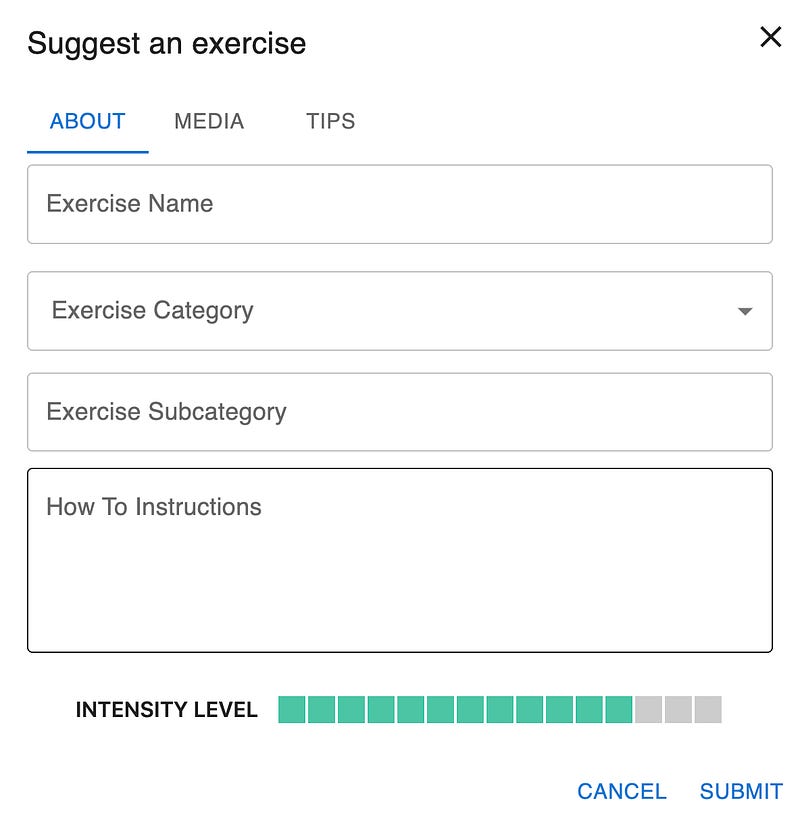

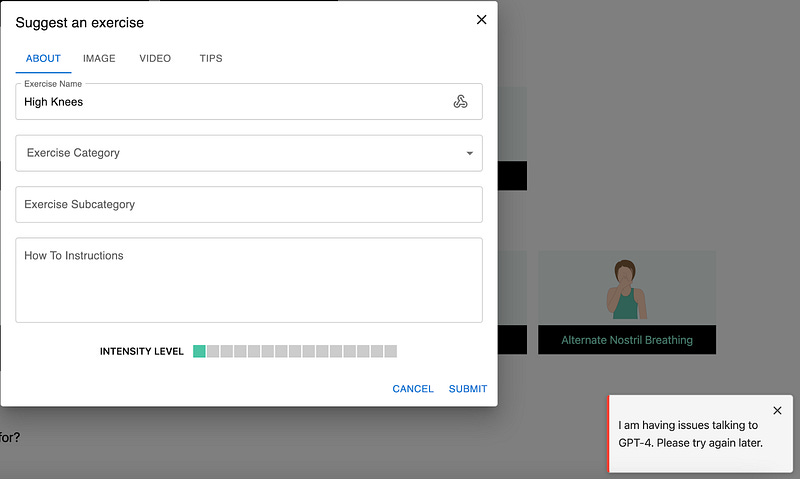

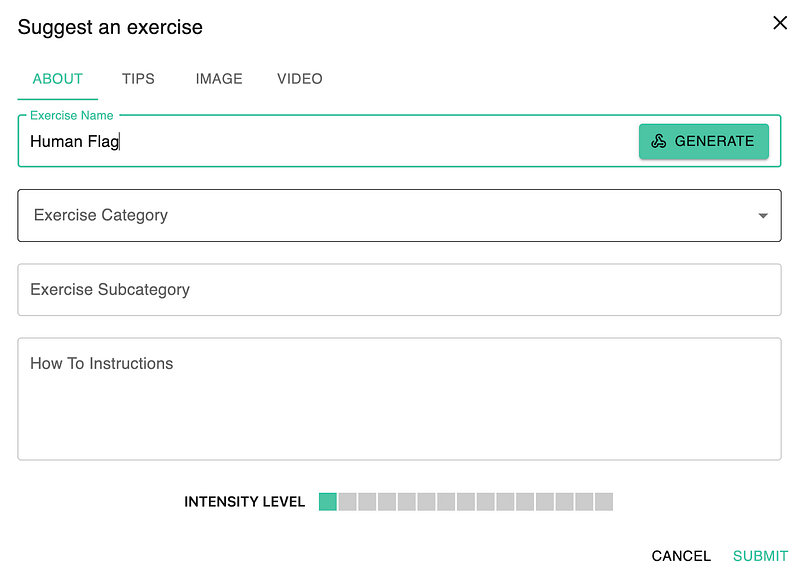

When the “Suggest An Exercise” button is clicked, it opens this dialog.

At this point, I made my first git commit.

Introduce a button to suggest an exercise and open a dialog to fill in basic details

March 30th 💬🫶: It took me about an hour to complete the coding and documentation up to this point. Earlier, I had spent over 3 hours working with GPT to finish the “Was This Helpful” feature visible in the Exercise Details Page screenshot I shared previously. After making good progress, I decided to take a break and pack my bags for my upcoming trip to Portugal before returning to the task later.

Step 6: GPT API Call

It’s now around half an hour past midnight. My brother-in-law and I are enjoying a well-deserved break, engaging in casual conversation and sharing a few laughs, all while watching some of his favorite shows. Amidst this relaxing atmosphere, I have my laptop in front of me, working on the project. Juggling a full-time job, I’ve learned to seize moments like these to “paint with code” and make progress on my passion projects.

I’ll skip this step for now until I have all the other parts completed.

Step 7: Saving State in React

In this step, I’ll integrate more code into the ExerciseSuggestion component, allowing the form updates to be saved in React state. This way, they can be accessed later for tasks such as storing data in the backend or sending an email notification with the collected information.

After plugging in the code, I encountered an error. I shared the error with ChatGPT, and in response, I received an updated code solution to address the issue.

I apologize for the confusion. It looks like the type of the event in the handleCategoryChange function is not compatible with the onChange event of the Material-UI Select component.

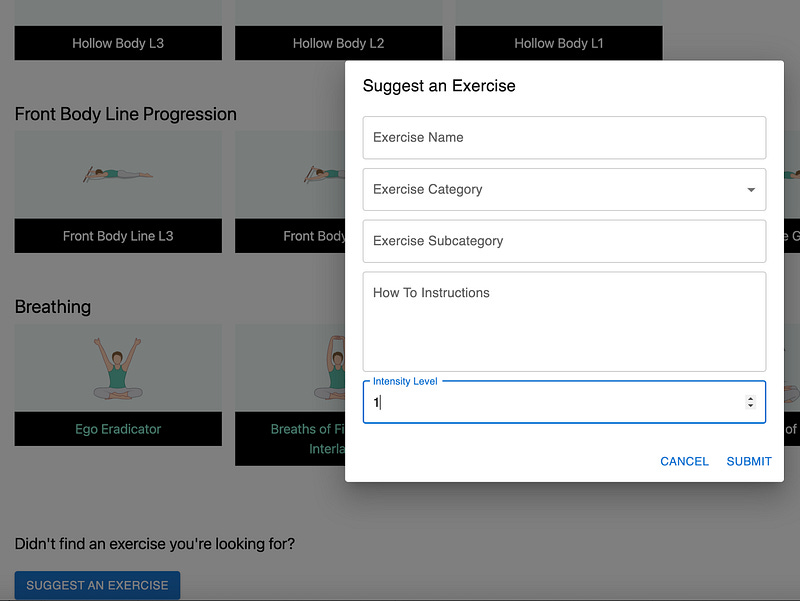

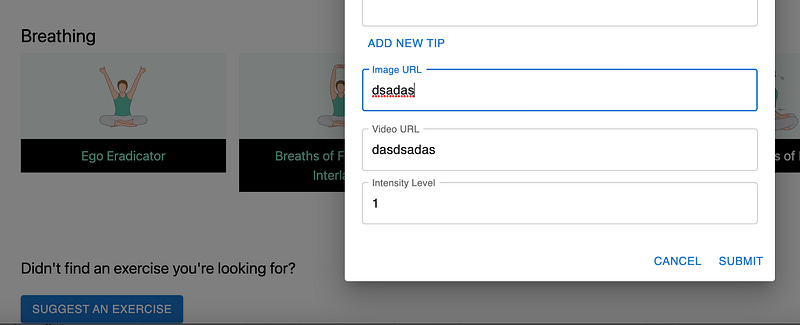

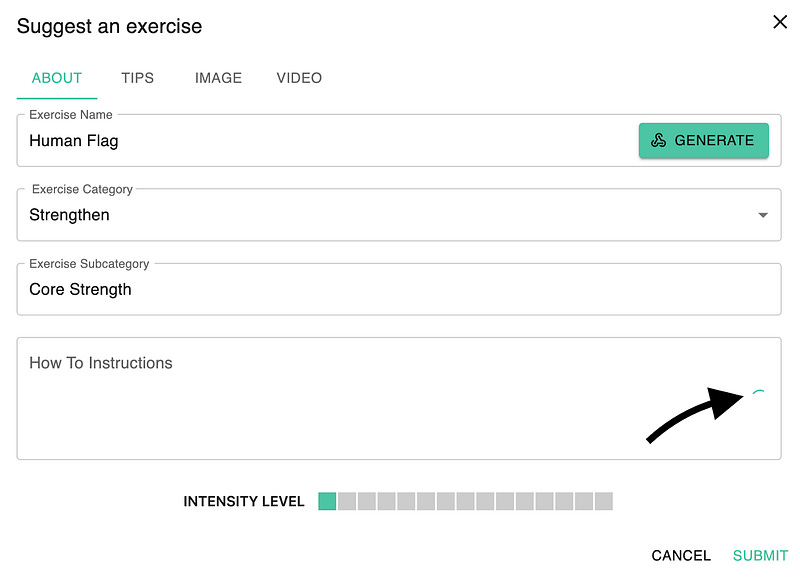

I proceeded to update the handleCategoryChange function with the new code, and thankfully, no errors occurred. Below is a screenshot showcasing the recent modifications.

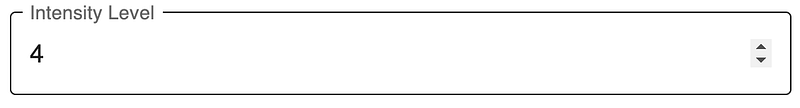

Interestingly, this time, ChatGPT included the code to display the “Intensity Level” field — a numeric value — along with the appropriate UI component to modify and maintain it as a number.

Step 8: Add Exercise Category Problem

That was already added in previously generated code, so I’m skipping it.

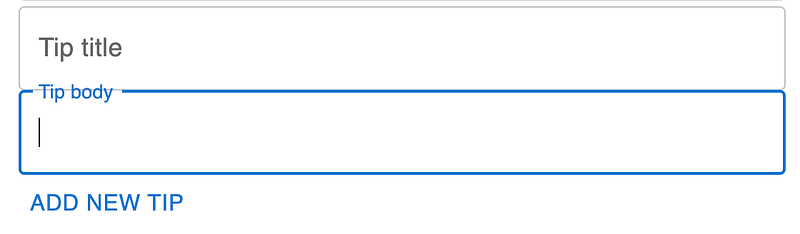

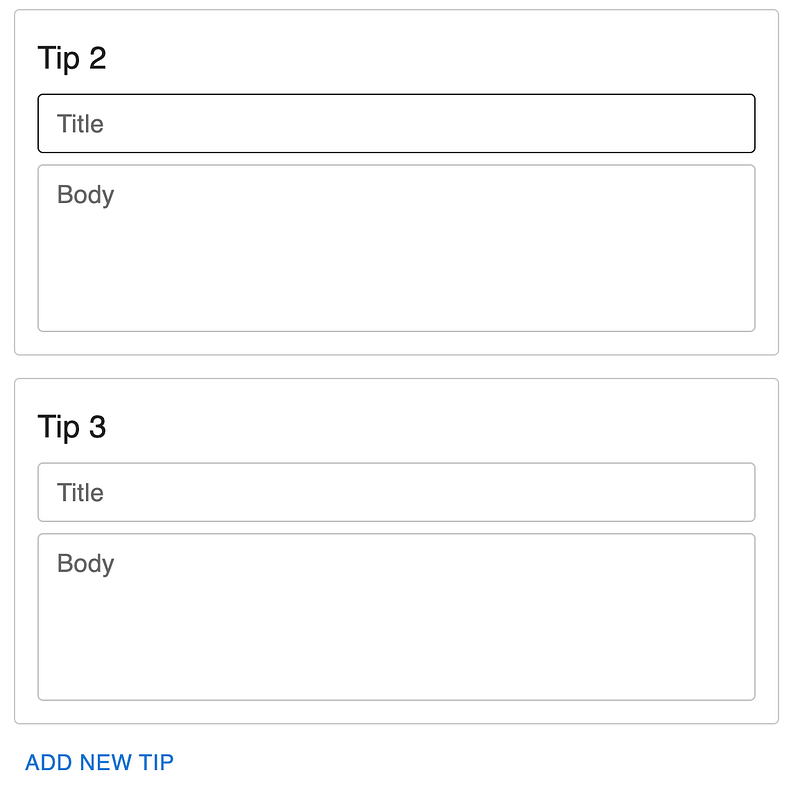

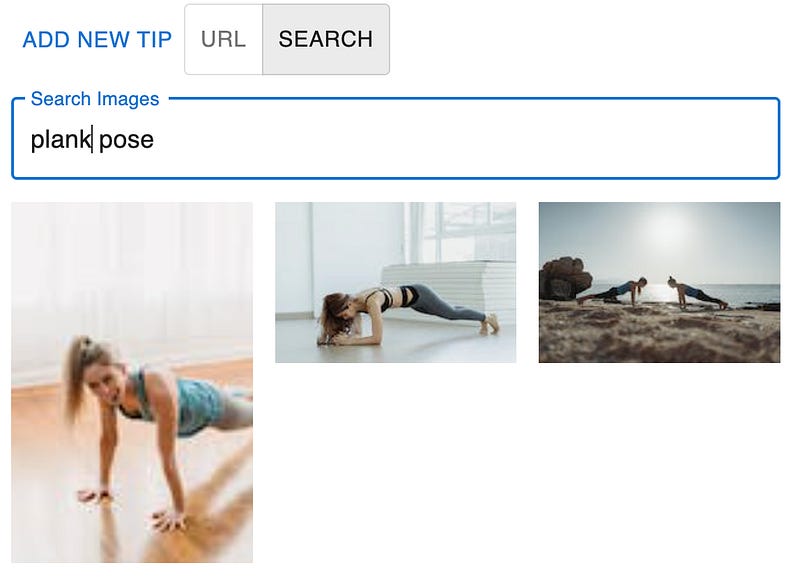

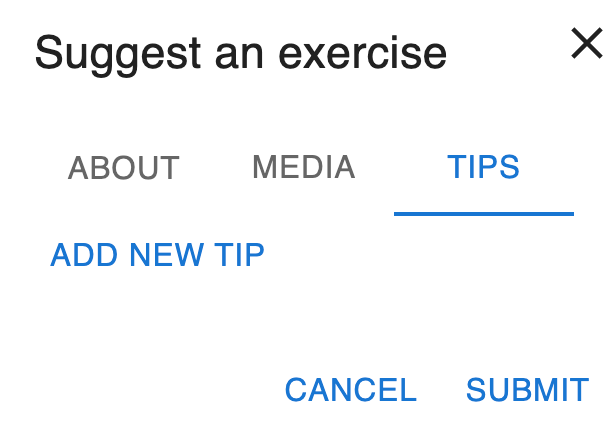

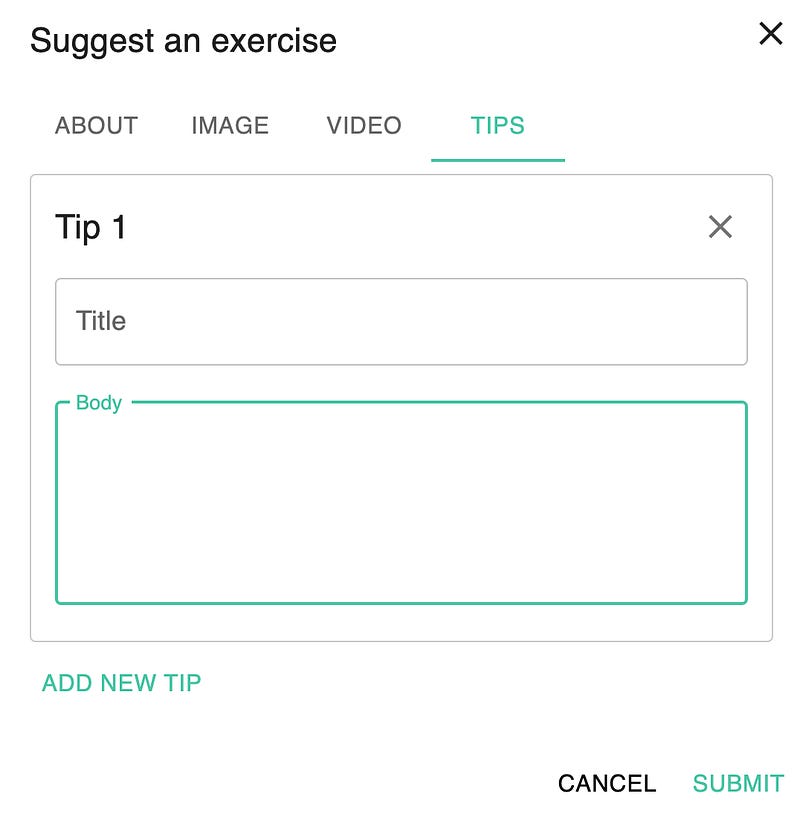

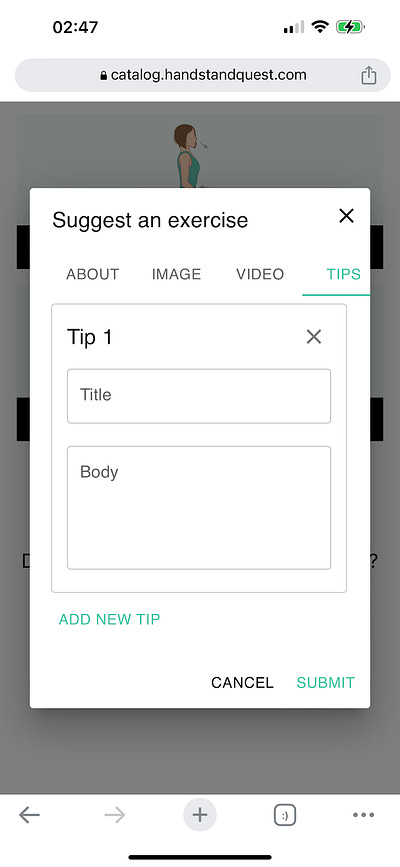

Steps 9 & 10: Add Tips

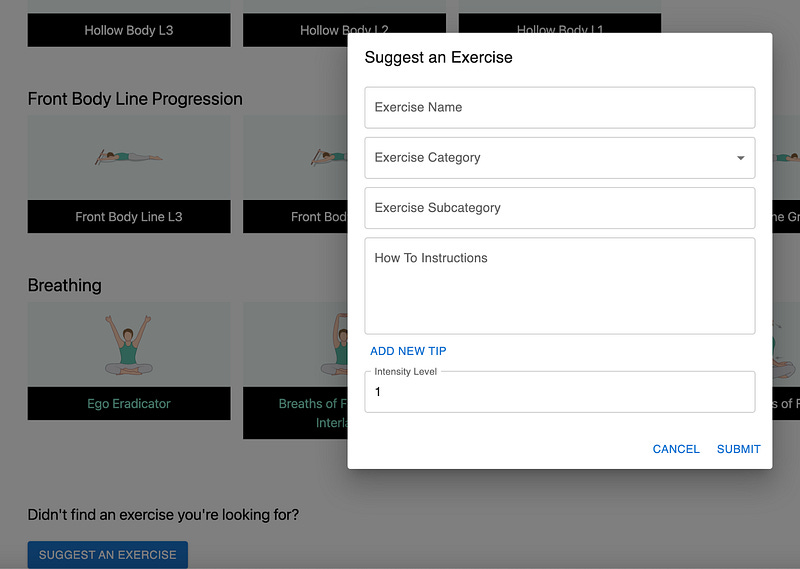

I plugged in the code for tips, which added the JSX for this component, along with the JavaScript to store state and handle adding new tips.

Now, it shows an “Add Tip” button in the form.

Upon clicking the “Add New Tip” button, the title and body fields for adding a tip are displayed, allowing you to add one or more tips. The positive aspect is that the tips fields were now automatically “connected” to React state variables.
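The generated tips code is not reproduced here, but the idea of keeping tips in React state can be sketched with two small helpers. This is a minimal illustration under assumptions: the names `addTip` and `updateTip` are hypothetical, and the real component may structure its handlers differently. The key point is that each update returns a new array instead of mutating the existing one, which is what React state updates expect.

```typescript
interface Tip {
  title: string;
  body: string;
}

// Return a new array with an empty tip appended (no in-place mutation).
function addTip(tips: Tip[]): Tip[] {
  return [...tips, { title: "", body: "" }];
}

// Return a new array where only the given field of the tip at `index` changes.
function updateTip(
  tips: Tip[],
  index: number,
  field: keyof Tip,
  value: string
): Tip[] {
  return tips.map((tip, i) => (i === index ? { ...tip, [field]: value } : tip));
}

// Inside a component this might be wired up as (hypothetical):
//   const [tips, setTips] = useState<Tip[]>([]);
//   onClick={() => setTips((prev) => addTip(prev))}
//   onChange={(e) => setTips((prev) => updateTip(prev, index, "body", e.target.value))}
```

Passing the field name explicitly ("title" or "body") keeps one handler usable for both inputs.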

Although the current user interface isn’t visually appealing, we plan to address that later.

Step 11: Image and Video

I revisited this step, and since the code appeared incomplete, I requested ChatGPT to provide the code for the image and video. However, I received the same response, so I decided to plug in the code anyway.

As a result, it merely added the text fields without URL validation. Ideally, I’d prefer a more user-friendly experience. I plan to address this issue later, but for now, I’ll git commit the progress made so far.

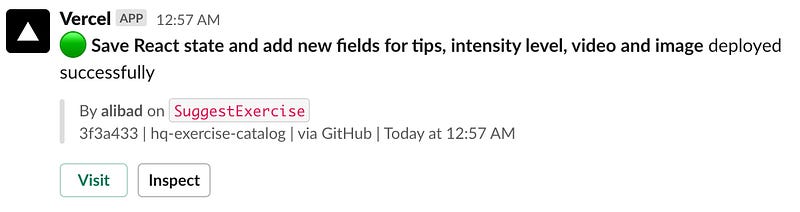

Save React state and add new fields for tips, intensity level, video and image

I pushed the git branch, and thanks to Vercel, I got a Slack notification that the deployment to the staging environment succeeded. I clicked on the “Visit” button in Slack to manually confirm all the new changes in the staging environment.

Refining The User Experience

Having completed the initial steps recommended by ChatGPT, it’s time for me to take a step back and evaluate the current user experience to determine my next course of action.

To stay focused on what’s essential, I revisit my original requirements. I’ve resolved to prioritize a solid user experience before diving into leveraging GPT for generating exercise content. While the excitement may have diminished slightly, I remain committed to achieving the desired outcome, as witnessing the final result will be rewarding in itself!

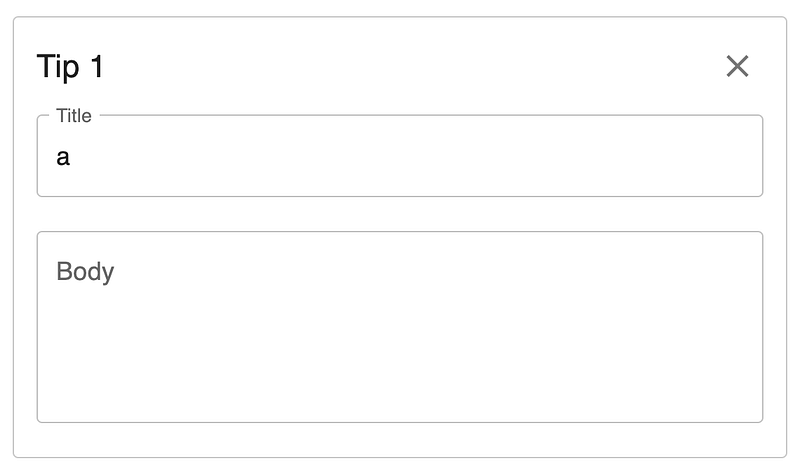

Category Field Title

I’ll start with the little things. Looking at the UI, when the user tries to pick a category, the field title shows with the field border striking through it.

I also see the same behavior in the tip body and title.

So I ask ChatGPT.

When the exercise category field is in focus mode, the border of the field is striking through the title.

ChatGPT gave me back the code to add a background color to the label (field title) and some padding, so it does not overlap with the border when the input is focused. Although it’s not what I was thinking exactly, I decided to give it a try and see what it looks like.

The code gave some errors, related to deprecated code and a div referencing a CSS file. It dawned on me that I haven’t needed a single css change so far!

March 31st 🫶: Anyways, I’m tired right now, and I am going to bed. I’ll come back to this as soon as life lets me.

March 31st 💬: Next morning, I have 30 minutes before Uber shows up for the ride to the airport. Let’s see if I can get this done.

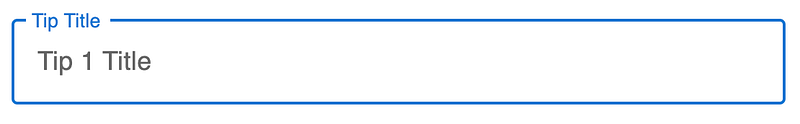

One minute later with minor tweaks, I got the code running and I am actually happy with the results.

Tips Title and Body Fields

I need to do the same for the tip title and body. So I asked ChatGPT, and it gave me the code and told me that the code will apply the required styles to the title and body fields of tips, ensuring that the border does not strike through the label.

There was an error because it decided to introduce a change handler for each of the tip title and body, when in the past it had one change handler for both. I fed it the error and it gave me the two new functions to plug in. There was another error, which I fed back in, and this time it gave me the correct code.

Ok, so the field now renders nicely when it is focused.

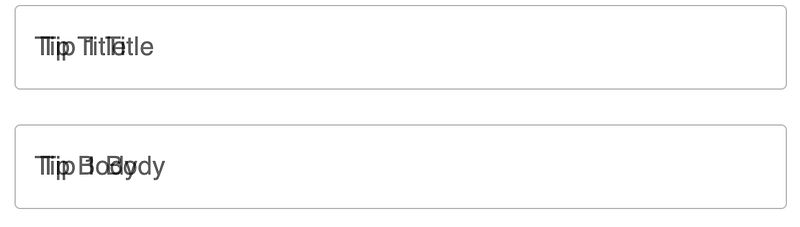

However, it’s broken when the text fields have text in them.

Well, in this case, I feel like ChatGPT complicated my life a bit and made me a bit dumber. What I need to do is figure out whether I should continue down the path.

I’m choosing to just remove all the new code and get back to the original state, then just tell ChatGPT to add some vertical padding to the tip title and body.

Now, I am paying a bit more attention (learning mode) and I asked ChatGPT what the syntax is all about.

The sx prop is a shorthand property provided by Material-UI to apply styles to a component. It allows you to define style rules using an object syntax, where keys correspond to CSS properties, and values represent the styles to apply.

In this case, sx={{ mt: 2, mb: 1 }} is setting the top margin (mt) to 2 units and the bottom margin (mb) to 1 unit. Material-UI uses an 8px spacing unit by default, so 2 units will correspond to 16px (2 * 8px) and 1 unit will correspond to 8px (1 * 8px).

The sx prop can be used to apply various styles, such as padding, margin, color, and more, in a concise and convenient way.

So all I did was just add the sx property to each of the two fields (title and body). Now, I have a fix to the strikethrough issue!
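The spacing arithmetic in that explanation can be written out as a tiny function. This is only an illustration of the math (units multiplied by the default 8px), not how Material-UI resolves `sx` internally; `resolveSpacing` and the shorthand table are hypothetical names, and only a few shorthands are covered.

```typescript
// Default spacing unit mentioned in ChatGPT's explanation.
const DEFAULT_SPACING_PX = 8;

// A few of the spacing shorthands and the CSS properties they stand for.
const SPACING_SHORTHANDS: Record<string, string> = {
  mt: "marginTop",
  mb: "marginBottom",
  pt: "paddingTop",
  pb: "paddingBottom",
};

// Convert shorthand spacing units into pixel values, e.g. { mt: 2 } -> { marginTop: "16px" }.
function resolveSpacing(
  sx: Record<string, number>,
  unitPx: number = DEFAULT_SPACING_PX
): Record<string, string> {
  const css: Record<string, string> = {};
  for (const key of Object.keys(sx)) {
    const property = SPACING_SHORTHANDS[key];
    if (property !== undefined) {
      css[property] = `${sx[key] * unitPx}px`;
    }
  }
  return css;
}

// The values used in the article: top margin 2 units, bottom margin 1 unit.
const tipFieldSpacing = resolveSpacing({ mt: 2, mb: 1 });
```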

Time for a git commit:

Fix issue where border of field strikes through its title for categories and tips title/body

March 31st 🫶: 10 minutes before Uber is here. Time to get in travel mode.

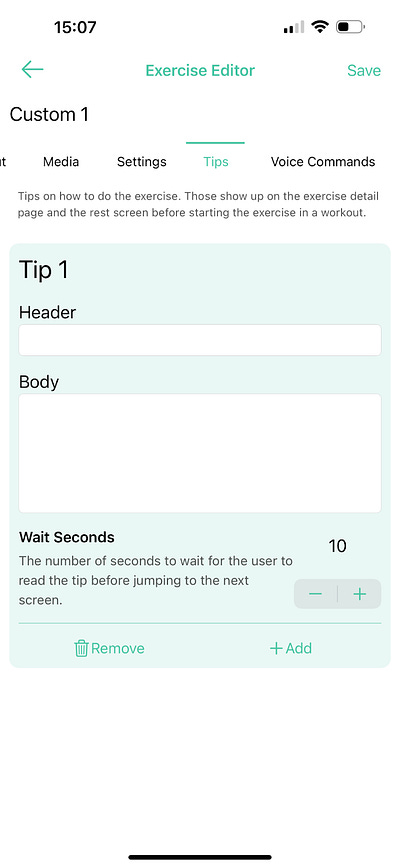

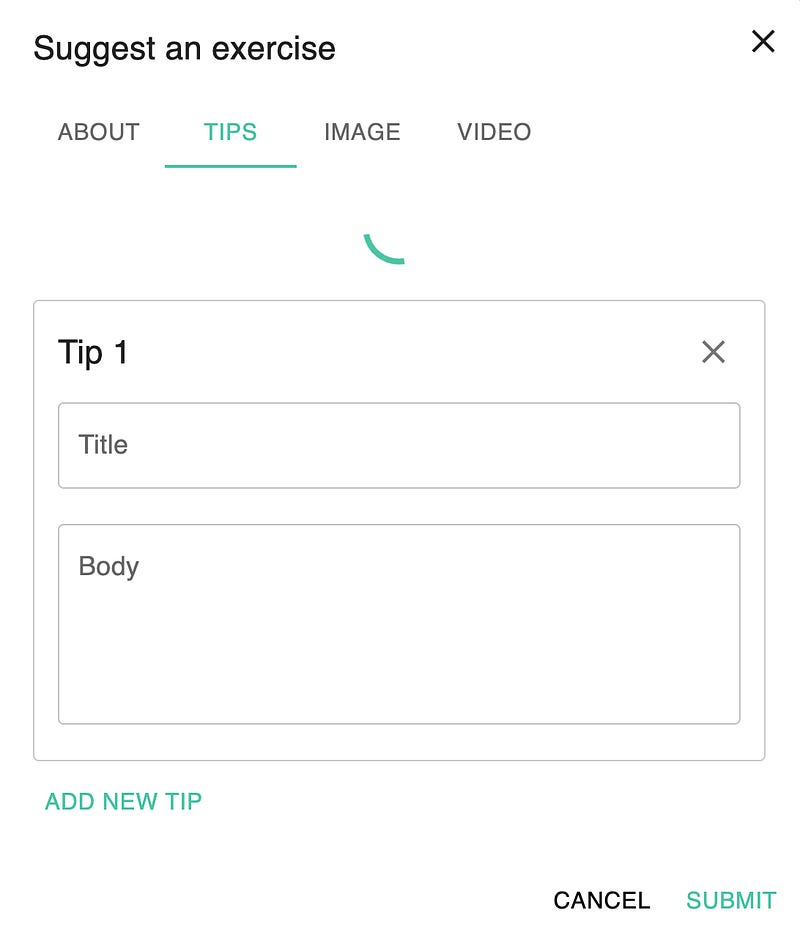

Tips Grouping

March 31st 💬 ⚽: Alright, I am back. It’s 1 pm and I’m at the airport. My flight departs at 6 pm. I’m that early as I had to drop my kid for an earlier flight with his soccer team. Actually, that’s the main reason I am traveling to Portugal. Washington EPD team is practicing with Academia Cristiano Ronaldo — Sporting Clube de Portugal, then participating in the Iber Cup, which is one of the largest Youth Football/ Soccer Tournaments on the planet.

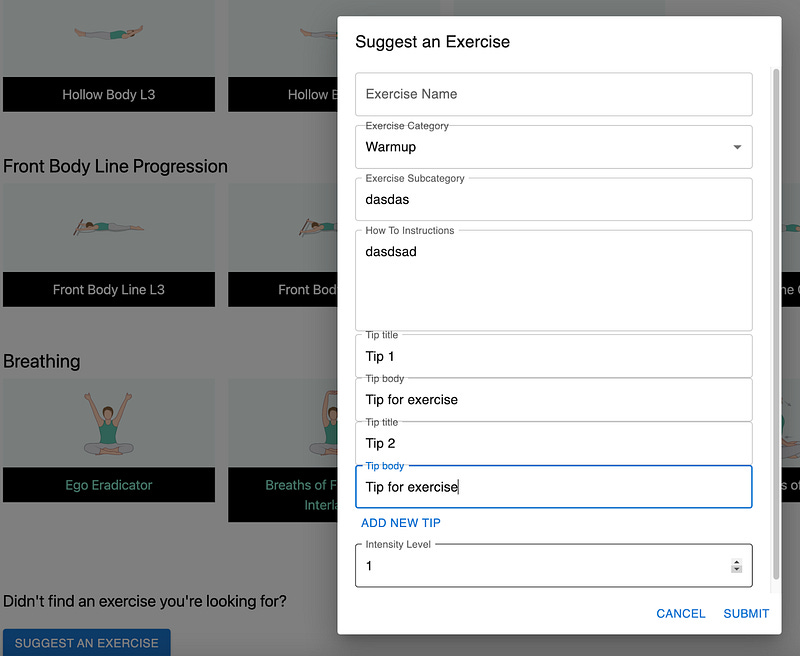

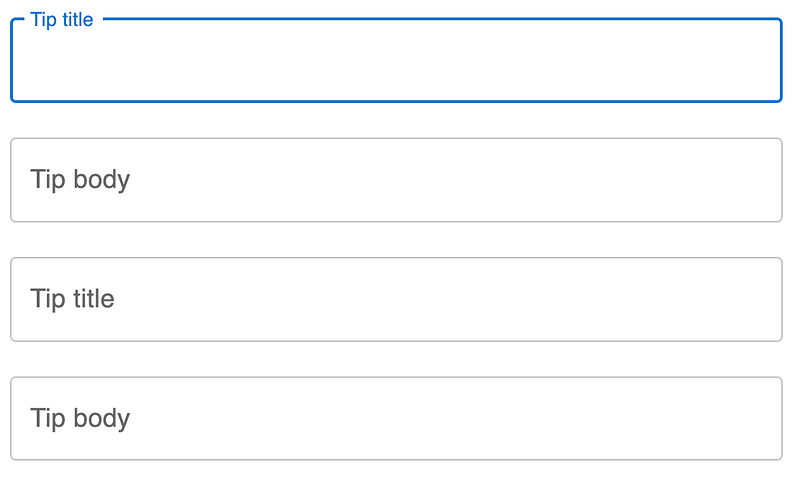

The next quick win in the user experience I want to have is to be able to easily differentiate the list of tips. Right now, it’s confusing.

I want to place the title and body into a Tip box that represents the order of the tip in the tips array. For example, the first tip would have a box with title “Tip 1” and within it, there would be a “Title” field and a “body” field.

I just pasted the paragraph above into ChatGPT and incorporated the provided code, which involved importing a new component from React. Although the code triggered an error, fixing it was a straightforward task.

The updated user interface, as shown below, may not be perfect, but it’s a considerable improvement compared to its previous state. For the time being, I am satisfied with the progress.

I have a bug though. Whenever I type something in the body, it shows it in the title and limits it to one character. Weird.

I pass the exact paragraph as is to ChatGPT and I plug in the changes, problem solved! For some reason, I don’t know why, and for now, I don’t care. I want to move on to building something fun.

Wait, it was actually my error copy/pasting the same handler code parameters for both the title and the body, so it was updating the title when I was updating the body!

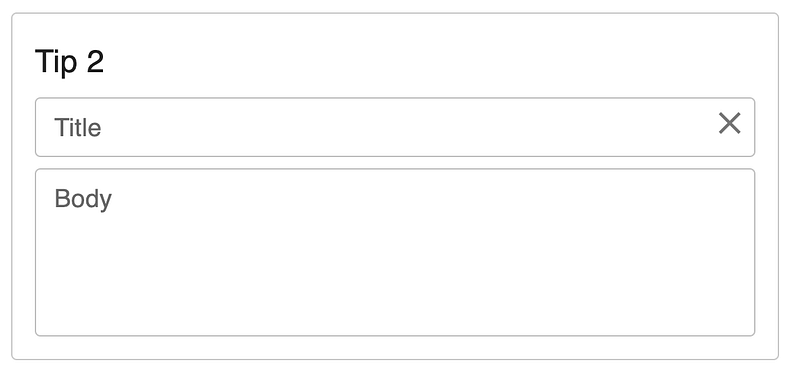

One last thing, I noticed there is no mechanism to remove a tip once it is added, so I’ve asked ChatGPT to add a close button in the top right corner of the tip box that would remove the tip box and the tip item from the array.

I plugged the code in and it added the X on the Title field. I kind of want it on the box itself above the title field. Once more, I’ve resolved that issue by talking with ChatGPT.
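Removing a tip from the array, and labeling each box by its position, can be sketched as below. The helper names `removeTip` and `tipLabel` are assumptions for illustration; the generated code is not shown in this article and may differ.

```typescript
interface Tip {
  title: string;
  body: string;
}

// Return a new array without the tip at `index`.
function removeTip(tips: Tip[], index: number): Tip[] {
  return tips.filter((_, i) => i !== index);
}

// Box title based on the tip's position in the array: "Tip 1", "Tip 2", ...
function tipLabel(index: number): string {
  return `Tip ${index + 1}`;
}
```

Because the label is derived from the index, removing "Tip 1" means the old "Tip 2" is relabeled as "Tip 1" on the next render.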

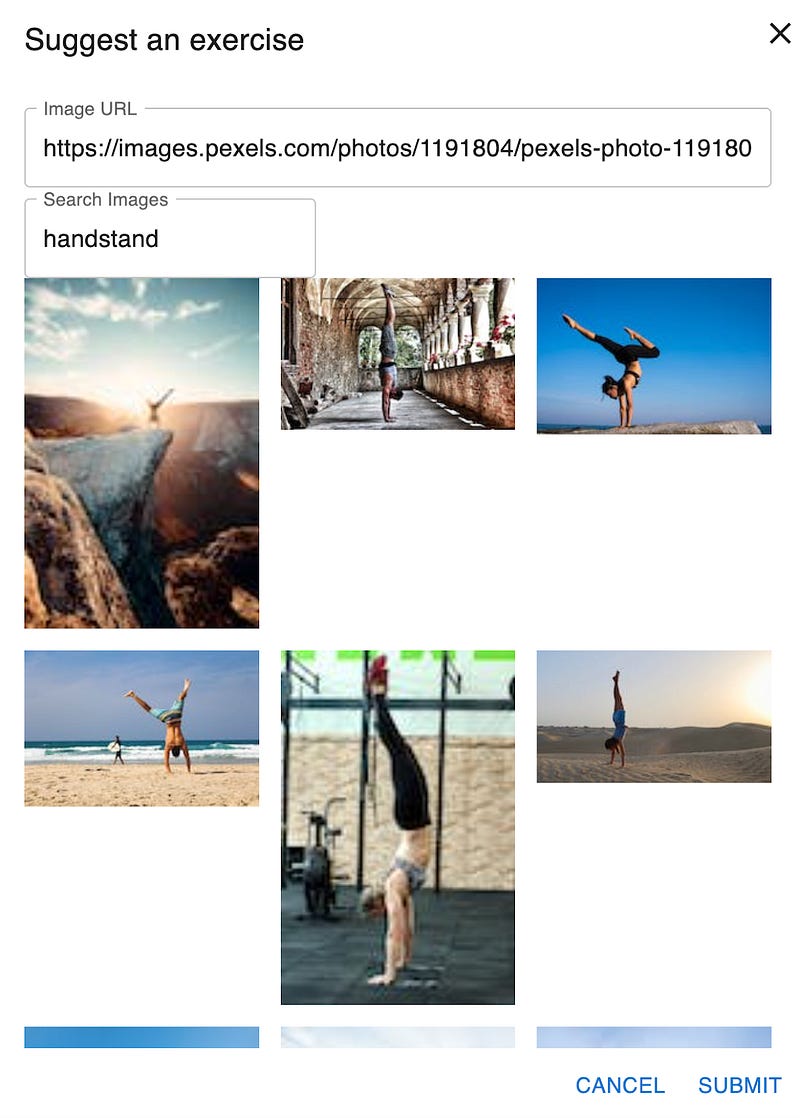

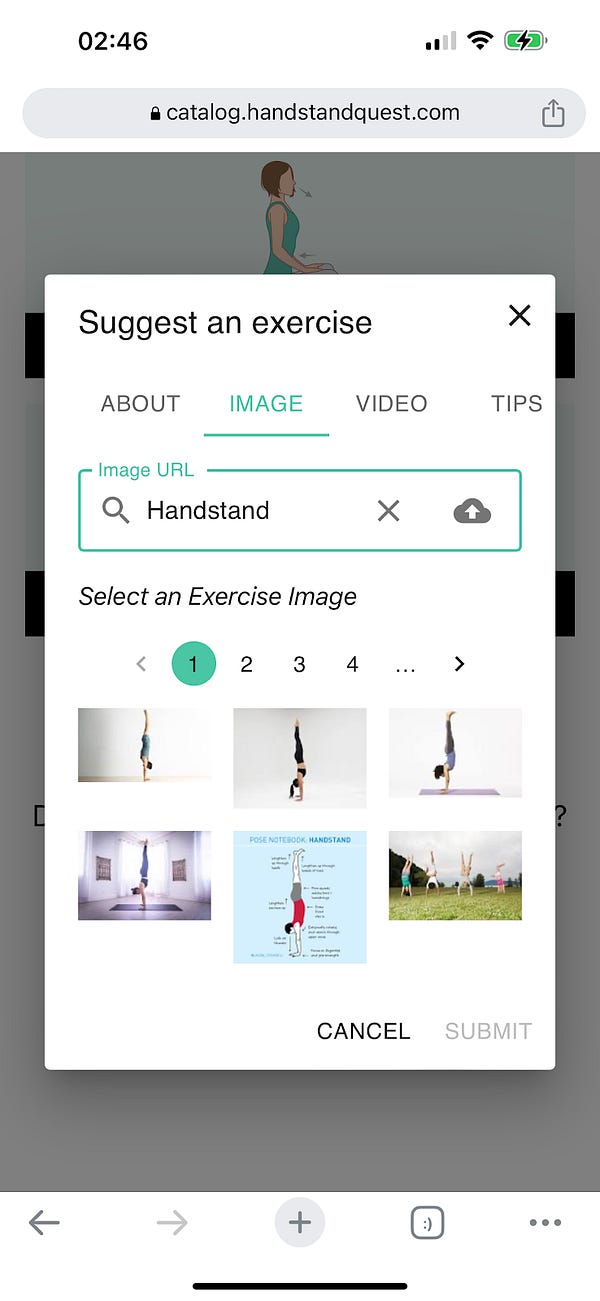

Exercise Image Experience

I’m trying to remember the name of some websites that offer free images, so I just asked ChatGPT.

- Unsplash (https://unsplash.com/): Offers high-resolution photos contributed by photographers from around the world. You can use these images for free with proper attribution.

- Pexels (https://www.pexels.com/): Offers a wide variety of free stock photos and videos, which can be used for personal and commercial projects without attribution.

- Pixabay (https://pixabay.com/): Provides a large collection of free images, illustrations, vector graphics, and videos under a Creative Commons Zero (CC0) license, which allows for use without attribution.

- Burst (https://burst.shopify.com/): Powered by Shopify, Burst offers free high-resolution images for entrepreneurs. These images can be used for personal and commercial projects.

- Freepik (https://www.freepik.com/): Offers free and premium vector graphics, illustrations, and photos. You can use free resources with proper attribution.

Given the above description, although I am very familiar with Unsplash, I had no idea it required attribution! For this reason, I decided to explore Pexels and Pixabay. Hopefully, they are at the same level of quality as Unsplash.

March 31st 🫶: That said, I need to go give my wife a 5 minute massage, because we had a deal, to take turns massaging each other. Be right back.

March 31st 💬: Ok, I am back at 1:40 pm.

I’ll just go ahead and explore whether there is an easy way to let a user lookup an image from Pexels. Here is my prompt to GPT:

Enable the user to lookup images from Pexels and when the user selects an image, update the value of the Image TextField to the url of the selected image.

Here are the things GPT told me to do. Let’s see how long this will take.

- Get a Pexels API key by signing up for a free account at https://www.pexels.com/api/new/. Make sure to replace “YOUR_PEXELS_API_KEY” with your actual Pexels API key.

- Install Axios, a popular HTTP client for making API requests, if you haven’t already:

- Create a new state variable to store the search results and the selected image URL:

- Create a function to search for images using the Pexels API:

- Create a function to handle selecting an image from the search results:

- Create a UI to search for images and display the results. You can use Material-UI components like TextField, Button, and Grid for this. When a user clicks on an image, call the handleImageSelect function with the image URL.
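The search and select steps above can be sketched as two pure helpers: one that builds the Pexels search request and one that pulls image URLs out of the response. This is a rough sketch, not the generated code. The function names are hypothetical, the response type lists only the fields used here, and the choice of the `medium` size is an assumption.

```typescript
// Only the response fields this sketch relies on.
interface PexelsPhoto {
  id: number;
  src: { medium: string; original: string };
}

interface PexelsSearchResponse {
  photos: PexelsPhoto[];
}

// Build the URL and headers for a Pexels photo search.
// Pexels expects the API key in the Authorization header.
function buildPexelsSearchRequest(query: string, apiKey: string, perPage: number = 12) {
  const url =
    "https://api.pexels.com/v1/search?query=" +
    encodeURIComponent(query) +
    "&per_page=" +
    perPage;
  return { url, headers: { Authorization: apiKey } };
}

// Extract one URL per photo for display in a results grid.
function extractImageUrls(response: PexelsSearchResponse): string[] {
  return response.photos.map((photo) => photo.src.medium);
}

// With Axios this might be used as (hypothetical wiring):
//   const { url, headers } = buildPexelsSearchRequest(searchText, apiKey);
//   const { data } = await axios.get<PexelsSearchResponse>(url, { headers });
//   setSearchResults(extractImageUrls(data));
```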

In about a minute, I generated a Pexels API key. I then npm installed Axios with no issues. I easily plugged steps 3 and 4 into the code. Steps 5 and 6 didn’t look like they would plug in well to my component, so I explicitly asked ChatGPT again to give me the code as part of the ExerciseSuggestion component.

Can you give me this code as part of the ExerciseSuggestion component

It generated the code for the component, but not all of it, so I had to carefully pick what I wanted to plug in.

It seemed like it updated the dialog to have the top right close button that I initially requested, so I thought I might as well copy that part and see what it looks like. This produced some errors due to referencing new components from new libraries, so I just imported those.

I copied the logic to search images. I made some minor variable and method name changes, along with including new components.

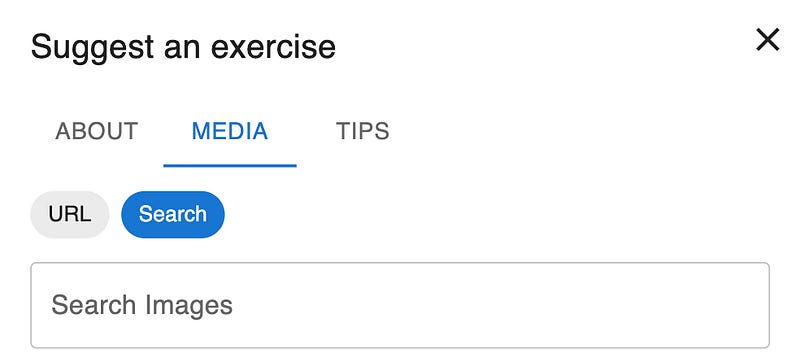

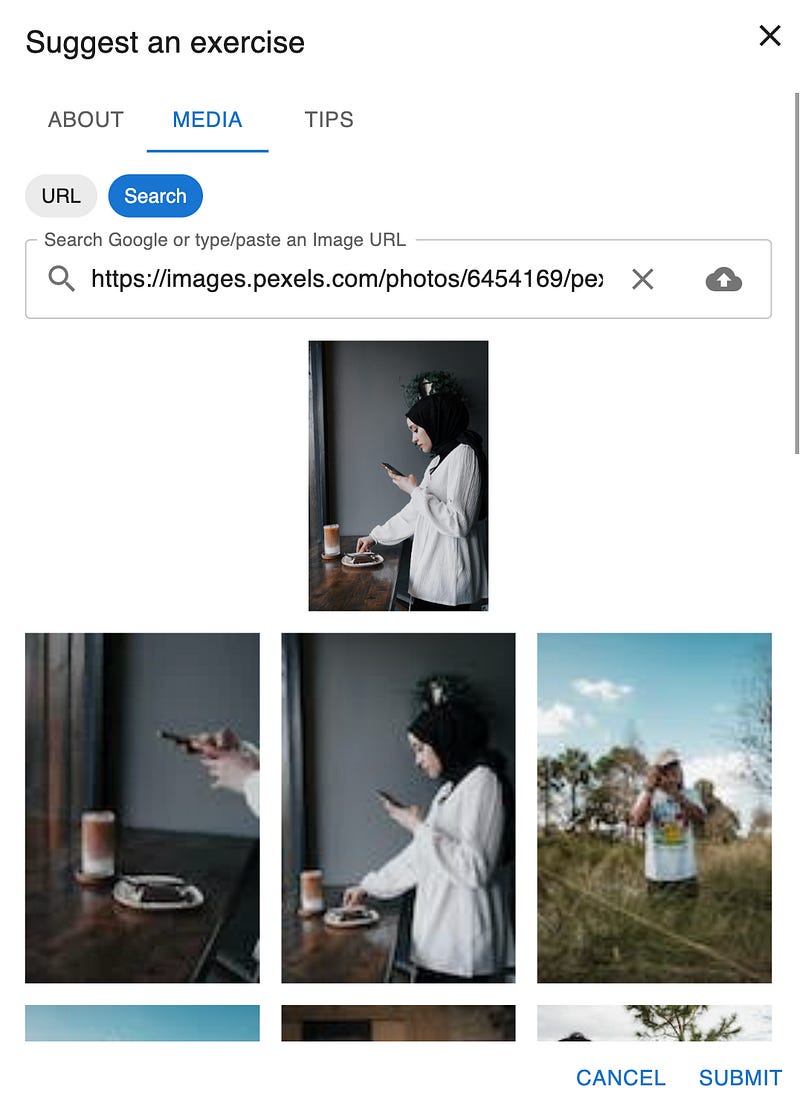

And there you have it — this is what the current UI looks like. Although it functions well, there’s still room for improvement in the overall user experience.

March 31st 💬 🫶: It’s currently 2 o’clock, so it took me around 20 minutes to complete. However, I had some distractions while talking to my wife, so I believe the outcome is still solid. I’m git-committing this change then going for a break.

Enable user to search images from Pexel

Improve Exercise Image Experience

March 31st 💬 🫶: I am back from the break, 4 hours later. I tried to sleep but couldn’t. My flight got delayed by an hour. I don’t care, I am coding with ChatGPT.

Observing the current experience, there are a few things I’d like to explore:

It’s confusing to the user that if you search images, they will show up. It’s also not clear that when you click on an image, it will automatically populate the Url field. Also it’s confusing having both the Url and Search fields showing at the same time without additional cues.

Given that I won’t actually end up using the exact images for the exercise, copyright is not much of a concern. So I’m thinking I want to fetch the images via a Google search instead for accuracy.

For the API tokens in the code, I want to make sure those are securely stored in environment variables rather than in the code.
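For that last point, a small helper can make a missing key fail loudly instead of silently sending an empty token. This is a sketch under assumptions: `requireEnv` and the variable name `PEXELS_API_KEY` are hypothetical, and the environment is passed in as a plain object so the helper stays easy to test.

```typescript
type Env = Record<string, string | undefined>;

// Read a required variable from the given environment, or throw a clear error.
function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// In server-side NextJS code (for example an API route) this might be called as:
//   const apiKey = requireEnv(process.env, "PEXELS_API_KEY");
// with the value defined in a local env file that is not committed to git.
```

In NextJS, only variables prefixed with `NEXT_PUBLIC_` are exposed to the browser, so keeping an API key without that prefix and calling the image API from the server side keeps the key out of client code.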

I just dumped the first two bullets above onto ChatGPT to see what I would get.

I got some new code, and it replaced the image search capability with an ImageSearch component. So I asked it to generate the code for that component and plugged everything in. Things are a little better, but not yet what I was looking for.

I asked ChatGPT to replace the ToggleButton with a Chip, and now it looks as follows:

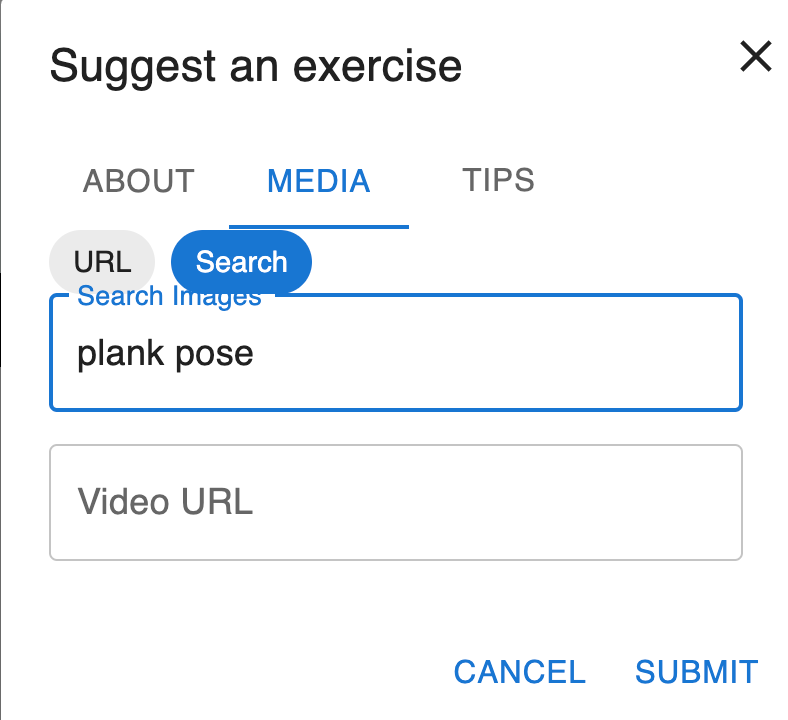

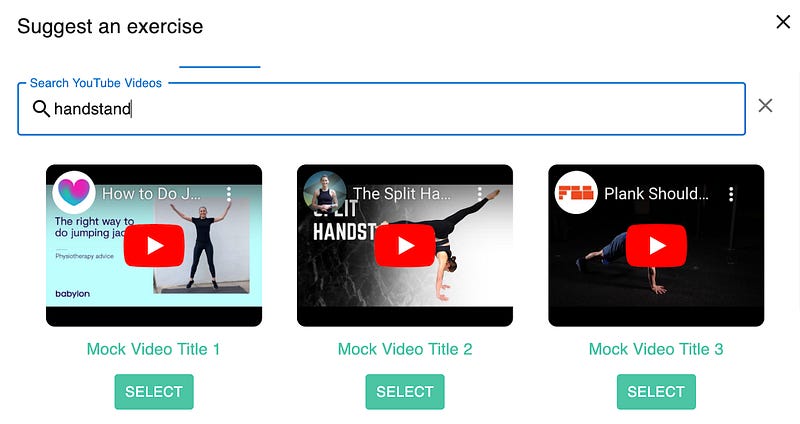

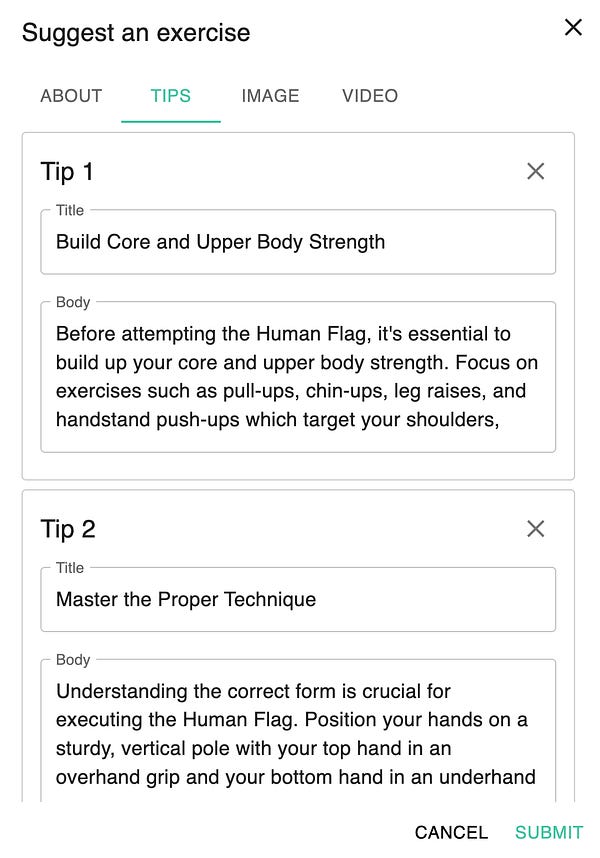

Fields Grouping in Tabs

The “Suggest Exercise” form has way too many fields at this point, so I asked ChatGPT to group them into tabs using the request below. It generated skeleton code, which I plugged into the file accordingly.

Here is the latest code for ExerciseSuggestion.

<all the code>

I want to add tabs at the top that would include different set of fields as follows:

- About, which includes Exercise name, category, subcategory and How to Instructions.

- Media, which includes the image url/search and the video url

- Tips, which includes the tips, defaulting to one entry in the tips array on initialization

I now have all the fields grouped. First tab looks good.

Second tab for the Media, not as much. Also, image search regressed. Not sure if that happened now or earlier with component refactoring. I’ll get to it later.

The tips dialog should have at least one item already pre-created by default.

I also want both the Media and the Tips tabs to retain the same width as the About tab.

Coding Without ChatGPT

March 31st 💬 🫶: I am now on the airplane, with no internet connection. To be honest, I feel some inner friction continuing, just given how much faster I could be going if I was working with ChatGPT.

I’ll push through just to fix those last issues I’ve discovered in the following order.

Fix the layout in the Media tab

Fix the issue with image search not working. I think this is due to the lack of internet connection, so skipping for now.

Have a default tip created

Fix the width of the dialog no matter which tab is selected

Those were such quick fixes, and I don’t think it would have been that much faster doing it via ChatGPT.

Intensity Level

I’d prefer a more consistent and user friendly experience for the intensity level. Currently, it’s a number field that can be changed with a spinner.

I’d like to use instead the existing “Intensity Level” component that shows up when you open an exercise details.

To do that, I replaced the <TextField> with <IntensityLevel> and gave IntensityLevel an onChange event handler that lets it notify the parent component when the intensity level changes.

March 31st 🫶: I managed to get the above done before I was asked to stow away my laptop for takeoff. However, for the rest of the flight, I tried to get some sleep but unfortunately, I was unsuccessful.

Image Experience — Continued

April 2nd 💬 🫶: Alright, I am in Lisbon, Portugal right now, sitting on the balcony. Last night and this morning, we went out and explored the neighborhood. I will work for about an hour then go to a local market.

Looking at the current experience:

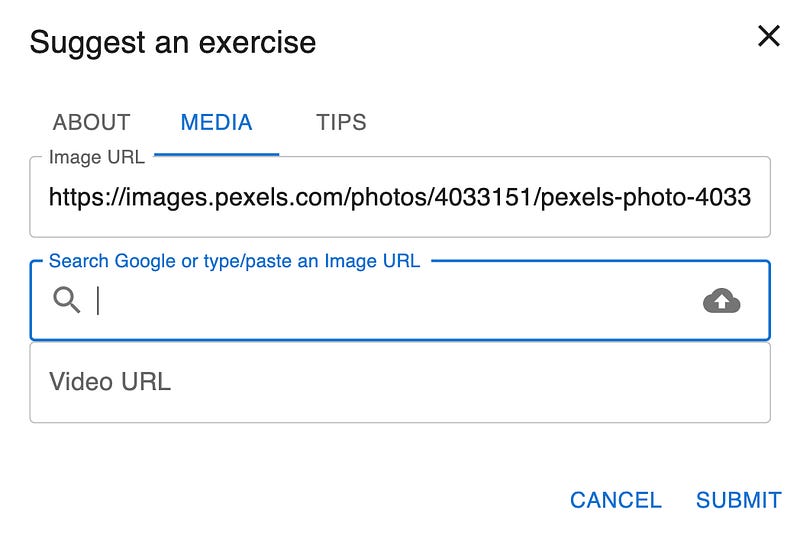

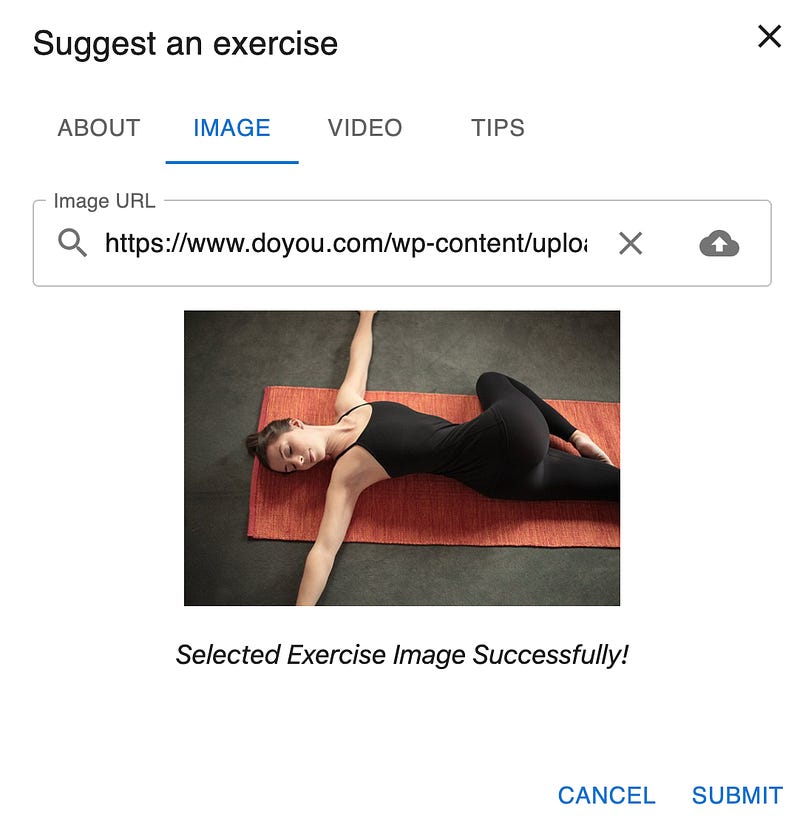

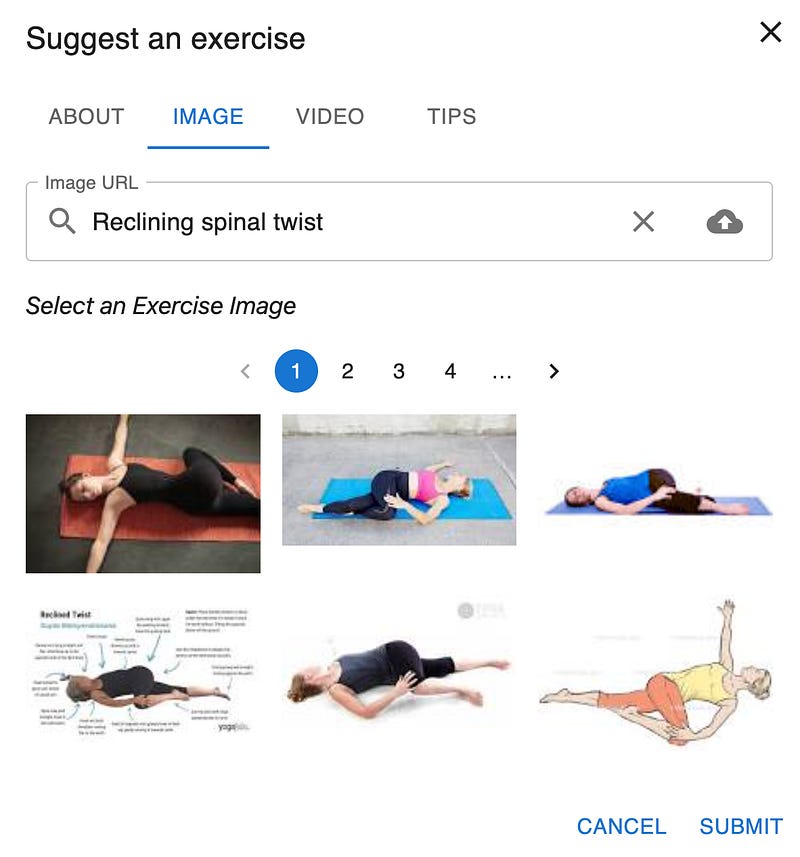

For the image url and search experience in the Media tab, I would like to make the following changes:

- Combine the “Image Url” text field and the “Search Images” text field into one Text Field with placeholder text “Search Google or type/paste an Image URL”.

- Show a search icon in the “Search Images” field

- Include a button on the right side of the text field that lets a user upload an image. When the image is selected, it is uploaded to Firebase storage, with a field name that matches the exercise name, with spaces replaced by underscore characters. The text field is updated with the new image url, and the image is previewed in the space below the text field.

- When there is text in the field, show a “clear” button. Add a separator between the clear button and the image upload button to its right.

- When the user enters a valid url, validate that the url points to an image. If it does, preview the image in the space below the text field. If it is a url that does not point to an image, show an error message. Otherwise, if it is not a Url, search Google (instead of Pexel) based on the text in the text field. The user should see a gallery of images corresponding to the search result. The user should be able to navigate the image pages. When the user selects an image, hide the image gallery, update the text field with the Url, and preview the image in the space below the text field.

I told ChatGPT to give me the code for the above given the current code I have for the ImageSearch.tsx component.

It generated the code with the following instructions:

This updated version of ImageSearch.tsx includes the combined TextField with the search icon, clear button, and image upload button. It also adds the image preview below the TextField for the selected image. Finally, it retains the original functionality of displaying search results in a Grid.

Make sure to replace the `searchImages` function with a call to the Google Custom Search API instead of the Pexels API. Also, replace `’Your_Exercise_Name’` with the actual exercise name in the `handleImageUpload` function.
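The naming rule from my prompt (exercise name with spaces replaced by underscores) can be sketched as a small helper. The function name `toStorageFileName` is hypothetical, and keeping the original file extension is an assumption that the prompt did not specify.

```typescript
// Derive a storage file name from the exercise name, e.g.
// ("Wall Handstand Hold", "photo.PNG") -> "Wall_Handstand_Hold.png".
function toStorageFileName(exerciseName: string, originalFileName: string): string {
  const base = exerciseName.trim().replace(/ /g, "_");
  const dot = originalFileName.lastIndexOf(".");
  // Assumption: keep the uploaded file's extension, lowercased.
  const extension = dot > 0 ? originalFileName.slice(dot).toLowerCase() : "";
  return base + extension;
}

// With the Firebase modular SDK, the result could then be used as the path
// passed to ref(storage, ...) before uploadBytes and getDownloadURL
// (hypothetical wiring; the actual handleImageUpload is not shown here).
```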

After plugging in the code and fixing how Firebase storage is being imported and used, here is the updated UI I see.

A few things work:

Search icon and clear button added.

A preview image is added when you select an image from the gallery

When the clear button is clicked, it clears the text, the preview image and the gallery

File upload button is added and lets you select a file to upload

A few things did not change or do not work as expected:

After uploading a file, nothing happens. This is likely an error I need to resolve.

Image search is still going through Pexels.

No page navigation added in image search gallery

The Image URL field is still there along with the URL/Search Chips

Part of this is not ChatGPT’s fault, since I only gave it the code of the ImageSearch component, while the Image URL and Chips actually live in the parent Component.

Merging Search Keyword and Url for Image

I provided the code for parent component and asked ChatGPT:

I want to remove the URL and Search Chips, and merge the Image URL field with the ImageSearch component as described earlier.

Selecting an image now updates the Image URL field.

My intention was to combine the Image URL field and the Search field into one. I’ll reiterate that to ChatGPT.

Combine the Image URL and Search fields into one. Name the new field “Image URL” and move the current field title as placeholder text “Search Google or type an Image URL”.

Also, apply the following requirements I mentioned earlier:

When the user enters a valid url, validate that the url points to an image. If it does, preview the image in the space below the text field.

If it is a url that does not point to an image, show an error message. Otherwise, if it is not a Url, search Google (instead of Pexel) based on the text in the text field. The user should see a gallery of images corresponding to the search result.

The user should be able to navigate the image pages.

When the user selects an image, hide the image gallery, update the text field with the Url, and preview the image in the space below the text field.

It generated code without taking into account the latest changes I’d applied to ImageSearch and its parent, so I asked it to try again with the latest code provided.

With the new generated code, I fixed a bug, and also passed the exerciseName from the parent to the ImageSearch component. Here is the value that ChatGPT added:

Added validation for url and image url. Added Error Message for when Url is not a valid image

Called Google APIs instead of Pexels with placeholders for API_KEY and SEARCH_ENGINE_ID

Seemed to merge the two fields
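The behavior of the merged field (preview a valid image URL, show an error for a non-image URL, otherwise search) can be sketched as a small decision function. This is an illustration only: the names are hypothetical, and the extension-based image check is a cheap heuristic that will reject valid image URLs that have no file extension.

```typescript
type InputKind = "empty" | "url" | "search";
type NextAction = "clear" | "preview" | "show-error" | "search";

// Decide whether the text is empty, looks like a URL, or is a search phrase.
function classifyInput(text: string): InputKind {
  const trimmed = text.trim();
  if (trimmed === "") return "empty";
  return /^https?:\/\/\S+$/i.test(trimmed) ? "url" : "search";
}

// Heuristic: does the URL path end in a common image extension?
function looksLikeImageUrl(url: string): boolean {
  const path = url.trim().split(/[?#]/)[0];
  return /\.(png|jpe?g|gif|webp|svg)$/i.test(path);
}

// Map the field's text to what the UI should do next.
function nextAction(text: string): NextAction {
  const kind = classifyInput(text);
  if (kind === "empty") return "clear";
  if (kind === "search") return "search";
  return looksLikeImageUrl(text) ? "preview" : "show-error";
}
```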

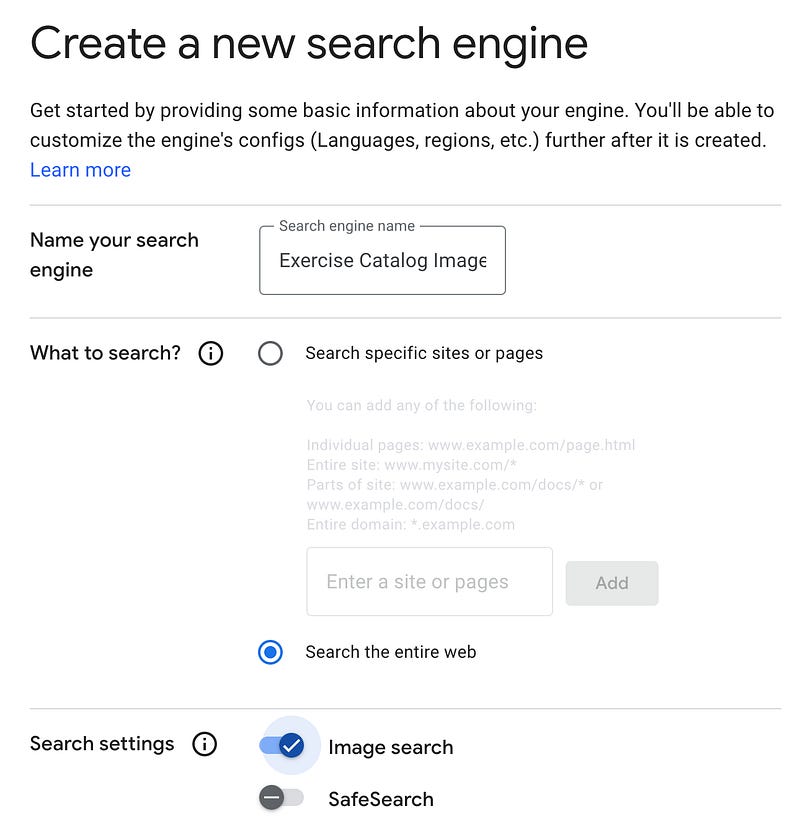

Google Image Search via API

It pointed out how to get the API_KEY, which was easy to generate. I asked it how to get SEARCH_ENGINE_ID, and it gave me the link, which I followed to create the “engine” to search images. On creation, it gave me the ID which I copied and pasted into the placeholder in my code.

Now that I’ve done that, Google Search works!
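For reference, a request to the Google Custom Search JSON API for images can be built roughly as follows. This is a sketch, not the code ChatGPT generated: the function names are hypothetical, and the page-to-`start` conversion assumes 10 results per page, which is the most the API returns per request.

```typescript
const RESULTS_PER_PAGE = 10;

// Build the request URL for an image search; `page` is 1-based.
function buildGoogleImageSearchUrl(
  query: string,
  apiKey: string,
  searchEngineId: string,
  page: number = 1
): string {
  // The API's `start` parameter is the 1-based index of the first result.
  const start = (page - 1) * RESULTS_PER_PAGE + 1;
  const params: string[][] = [
    ["key", apiKey],
    ["cx", searchEngineId],
    ["q", query],
    ["searchType", "image"],
    ["num", String(RESULTS_PER_PAGE)],
    ["start", String(start)],
  ];
  const queryString = params
    .map(([name, value]) => `${name}=${encodeURIComponent(value)}`)
    .join("&");
  return "https://www.googleapis.com/customsearch/v1?" + queryString;
}

// Only the response fields this sketch relies on.
interface GoogleSearchResponse {
  items?: { link: string }[];
}

// `items` is absent when there are no results, so default to an empty list.
function extractImageLinks(response: GoogleSearchResponse): string[] {
  return (response.items ?? []).map((item) => item.link);
}
```

The `page` argument is also one way to support the gallery page navigation requested earlier, by requesting the next block of 10 results.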

However, it seems to have lost the behavior to hide the gallery when an image is selected.

Image Url Preview

Pasting a link in the search box magically previews the image, so there is at least also a win on that front.

Side Note: The above is a picture of Portugal’s Sporting Club taken at some point in time. I quickly recognized player number 8, Bruno Fernandes. He joined Manchester United in January 2020 and has since become an integral part of the team’s midfield. Before joining Manchester United, Fernandes had a successful career at Sporting Club, where he won the Taça de Portugal and the Taça da Liga in the 2018–19 season.

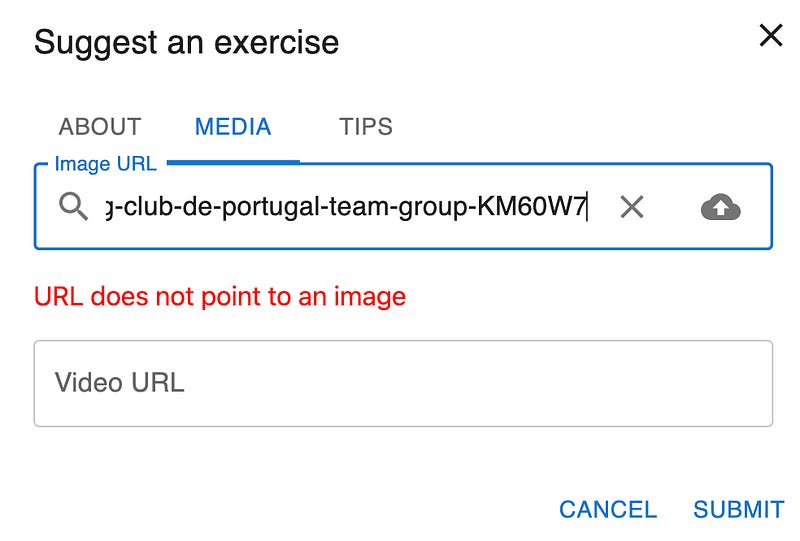

Url Image Validation

Another win is showing an error message when something looks like a URL but is not a valid image url.

Hide Gallery on Image Selection

I asked ChatGPT to hide the gallery when an image is selected. It introduced a showGallery state that I plugged into the code.

Improved Image Validation

When I select an image though, it says it’s invalid. I told ChatGPT that <copied url> is a valid image url but I am getting a validation error on it. I got the answer below and I updated the code accordingly using the original function name.

Apologies for the confusion earlier. It seems that the isImageUrl function is unable to validate the image URL you provided. To fix this issue, you can use a more robust approach to determine if the URL points to an image. You can do this by fetching the image and checking the content type of the response.

Here’s the updated validateImageUrl function using this approach.
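ChatGPT's snippet is not reproduced here. As a minimal sketch of the approach it describes (fetch the URL, then check the response's content type), something along these lines could work. The `Fetcher` type and the decision to send a `HEAD` request are assumptions of this sketch, not the code I actually shipped; in a browser, cross-origin requests may also be blocked by CORS, in which case the function simply reports the URL as invalid.

```typescript
// Returns true when a Content-Type header value denotes an image,
// e.g. "image/jpeg" or "image/png; charset=binary".
function isImageContentType(contentType: string | null): boolean {
  return contentType !== null && contentType.trim().toLowerCase().startsWith("image/");
}

// Minimal shape of the response this sketch relies on, so the real `fetch`
// or a test stub can be passed in (hypothetical types, for illustration).
type HeadResponse = { ok: boolean; headers: { get(name: string): string | null } };
type Fetcher = (url: string, init: { method: string }) => Promise<HeadResponse>;

// Resolves to true only if the request succeeds and the server says it is an image.
async function validateImageUrl(url: string, fetcher: Fetcher): Promise<boolean> {
  try {
    const response = await fetcher(url, { method: "HEAD" });
    return response.ok && isImageContentType(response.headers.get("content-type"));
  } catch {
    // Network failure, CORS rejection, malformed URL: treat all as "not a valid image".
    return false;
  }
}
```

In the component this would be called as `await validateImageUrl(url, fetch)`. Because it is async, the caller has to await it rather than use the returned promise as a boolean.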

Multiple Searches

Another bug I found: when an image is selected and I clear the search box and then try to search again, searching no longer seems to be active. I told that to ChatGPT and it gave me the fix described below. The fix didn’t produce the desired behavior, so I looked at the code and made the appropriate fix myself. Following ChatGPT blindly in this case would have created more problems than it solved.

You’re right. The issue occurs because the gallery is not shown again when you start searching after clearing the search box. To fix this, you can update the useEffect hook that triggers the search to also set showGallery to true when the searchText changes

Note: it turns out there was a bug with this code and an unnecessary state (showGallery) being used.

Debouncing

Another thing I do not like about the experience is the fact that the UI refreshes the list of images as you type, which creates a weird flickering effect. As a result, I told ChatGPT to only apply the search when the user pauses typing, and it gave me the debounce code, which I applied successfully.
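For readers who have not seen the technique, here is a minimal, framework-free sketch of debouncing. It is not the exact code ChatGPT gave me; the `debounce` helper, its `cancel` method, and the 300 ms delay in the usage note are all illustrative assumptions.

```typescript
type Debounced<A extends unknown[]> = ((...args: A) => void) & { cancel(): void };

// Wraps `fn` so that it only runs once calls have stopped for `waitMs` milliseconds.
// Each new call cancels the pending one, so only the last set of arguments is used.
function debounce<A extends unknown[]>(fn: (...args: A) => void, waitMs: number): Debounced<A> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  const debounced = (...args: A): void => {
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };

  // Drops any pending call, e.g. when the component unmounts.
  debounced.cancel = (): void => {
    if (timer !== undefined) clearTimeout(timer);
    timer = undefined;
  };

  return debounced;
}
```

With something like `const search = debounce(runImageSearch, 300)` (where `runImageSearch` stands in for the real search function), typing "handstand" quickly triggers a single search for the full word instead of one search per keystroke, which is what removes the flicker.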

Pagination

Next, I would like to be able to let the user move between pages of the image gallery.

I gave that to ChatGPT, and it gave me the code, which included installing the @mui/lab NPM package. Luckily, I knew better and told ChatGPT that I wanted to use Pagination from @mui/material, not @mui/lab.

I plugged in the code, and I hit the error where variable totalPages does not exist, and when I asked ChatGPT, I got what I wanted.

I now have pages for navigation. However, the max page number is so high, when I click on it, Google seems to ignore my search.

My first thought was to cap the page count at the minimum of 1000 and the total number of pages, and see if that practically does the job for what I am looking for. Even with that, Google returns an HTTP 400 when I try to access page 1000. Maybe I should update the Pagination behavior to only navigate to the next page rather than allow navigation to the max page. ChatGPT helped me get that done.

If I search for something, go to page 5, then search for something else, the page is not reset to 1. I fixed that myself by resetting currentPage back to 1 every time the searchText field changes.
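A likely explanation for the HTTP 400: Google's Custom Search JSON API documentation states that the API never returns more than 100 results for a query and accepts at most 10 results per request, so very high page numbers ask for results that cannot be served. The sketch below shows one way to turn a page number into the API's `start` index while staying inside that window. The helper names are hypothetical, and it assumes 10 results per page rather than the 6 I ended up displaying.

```typescript
const RESULTS_PER_PAGE = 10; // the largest page size the API accepts per request
const MAX_RESULTS = 100;     // assumption from the docs: results beyond this are unreachable

// Highest page number worth showing in the Pagination control.
function maxPage(totalResults: number): number {
  const reachable = Math.min(totalResults, MAX_RESULTS);
  return Math.max(1, Math.ceil(reachable / RESULTS_PER_PAGE));
}

// 1-based index of the first result on the given page, clamped to the reachable range.
function startIndexForPage(page: number): number {
  const clamped = Math.min(Math.max(1, Math.floor(page)), maxPage(MAX_RESULTS));
  return (clamped - 1) * RESULTS_PER_PAGE + 1;
}
```

Under these assumptions the Pagination component would show at most 10 pages, and a request for page 1000 would be clamped to the last reachable page instead of producing an error.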

Considering Infinite Scrolling

Another issue was the fact the UI jumps back to the top every time I change the page, so I thought I might as well replace pagination with infinite scrolling. I have ChatGPT’s help after all! Here is what it told me to do to transition to infinite scrolling:

To replace the Pagination logic with infinite scrolling, you can use the IntersectionObserver API to detect when the user has scrolled to the bottom of the search results. Here’s how you can modify the ImageSearch component to implement infinite scrolling:

- Remove the Pagination component and related state variables (i.e., currentPage and totalPages).

- Create a new state variable to track the loading state of the search results:

- Create a reference for the element that will trigger the loading of more results when it becomes visible:

- Create a function that will load more results and append them to the current search results:

- Update the searchImages function to return the fetched results instead of setting the state directly:

- Set up an IntersectionObserver in a useEffect hook to observe the loadMoreRef element and trigger the loadMore function when it becomes visible:

- Add a div element as a child of the Grid container with a ref to loadMoreRef. This element will trigger the loading of more results when it becomes visible:

- Optionally, display a loading spinner when more results are being loaded:

The code had a lot of issues. To begin with, it was pulling the same images for every page. I decided to skip infinite scrolling, display 6 images at a time, and show the pagination above the gallery.

April 2nd ~2pm 💬 : Few hours passed, my wife is starting to get frustrated with me stuck on a laptop while we’re on vacation! It’s time to go do some exploration in Lisbon!

Image Url State

April 2nd ~7:30 pm 💬 🫶: Back from another exploration going the other way in the neighborhood.

I’ve discovered that the image url set in the ImageSearch component is not propagating to the parent component, so I fixed that.

I also refactored the code by removing some unnecessary states like showGallery and selectedImage. I think I picked those up from ChatGPT without paying much attention that the code could be written without them.

Again, when the image field was cleared, the imageUrl in the parent was not being reset to empty. Those are some of the details you’re likely to miss when you end up relying too much on ChatGPT.

Move Video Url to its own tab

In preparation to work next on Video, I decided to create a new tab “Video” and move that field there.

Better Image Validation

As I was testing, I noticed that the isImageURL logic that I’m using sometimes thinks an image is valid, even when it doesn’t load on a web page. Ex: https://www.hotelzaza.com/hs-fs/hubfs/ZaZa-HOU-CONCEPT-SUITE-AnAffairToRemember.jpe.

I changed the behavior of validation, but that was not enough. Given that the image validation function is async, and it was being called in useEffect as a synchronous function, this was creating some unexpected behavior. Apparently, ChatGPT missed that. So I defined a new async function within useEffect and managed to find a way to get it to work.

Another situation where relying initially on ChatGPT could cause unexpected issues down the line.

Storing Google API Keys safely

ChatGPT plugged the Google API secrets in code. With its help, I moved it to NextJS environment variables.

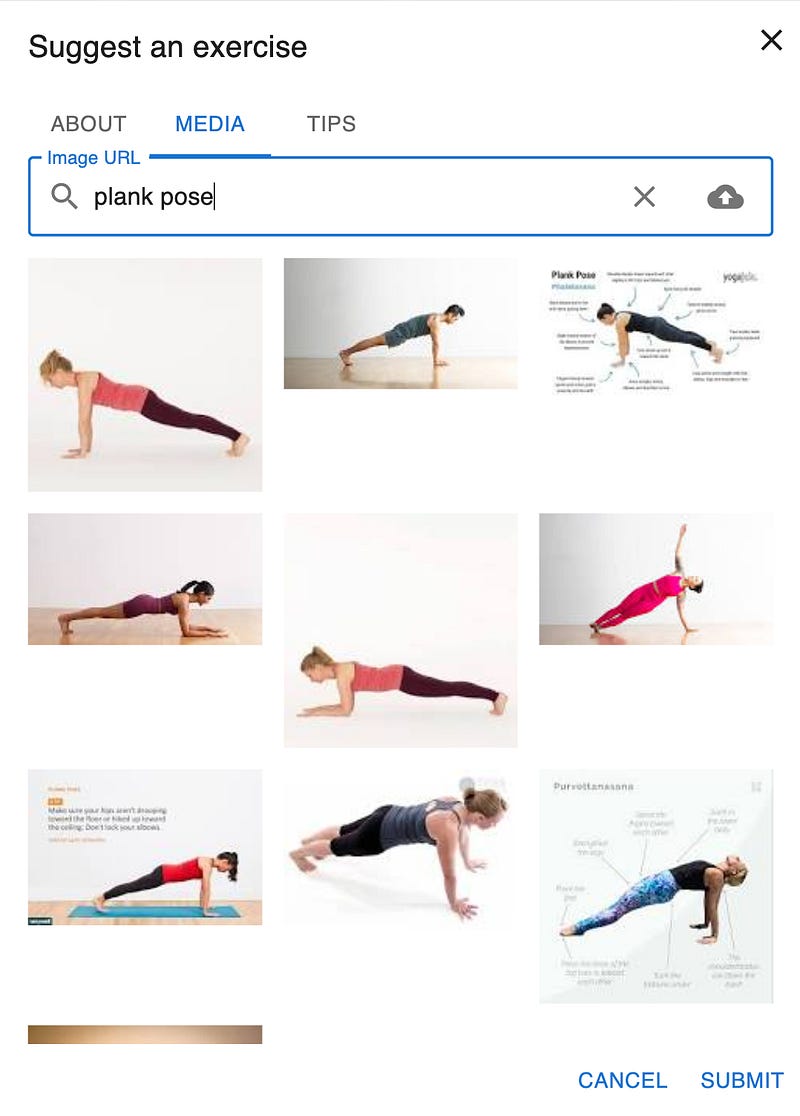

Automatically searching based on the provided exercise name

If the user provides an exercise name and the Image Url isn’t already set, I would like to automatically populate the Image Search field with the exercise name and automatically search for it. Done, and you probably know by now who helped me out.

Knowing when the user should select an image

Next, I’d like to make it easy for the user to know when an image is selected.

And when they search in the gallery, I want them to know that they can select an image from the gallery.

Image Upload Behavior to Firebase storage

The one last very visible experience that’s not working is the ability to upload an image. I fixed that and also showed an error message when upload fails. This time, without ChatGPT’s help.

Google API Safe Search

I also realized I need to turn SafeSearch on, which I did from the Search engine settings I’ve created earlier.

However, even after a few days, I was able to search for unsafe things. I asked ChatGPT.

The Google Custom Search API sometimes may not fully respect the SafeSearch setting of the Custom Search Engine (CSE) configuration. In such cases, you can enforce SafeSearch by adding the safe parameter directly in your API requests.

To enforce SafeSearch in your API requests, set the safe parameter to active:

However, please note that SafeSearch is not perfect, and there might still be some explicit content that slips through the filter. It’s always a good idea to have additional measures in place if content safety is crucial for your application.

Although content safety is not paramount, I still prefer to ensure its protection. Therefore, I took the necessary steps to make the change, and it successfully resolved the issue.
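The snippet ChatGPT gave me did not survive the move from Medium, so here is a minimal sketch of what the request looks like with the `safe` parameter set. The function name and argument names are illustrative; `key`, `cx`, `q`, `searchType`, `safe`, and `start` are the Custom Search JSON API query parameters as I understand them.

```typescript
// Builds a Google Custom Search request URL for images with SafeSearch enforced.
// `apiKey` and `searchEngineId` correspond to the API_KEY and SEARCH_ENGINE_ID
// placeholders mentioned earlier; they should come from environment variables.
function buildImageSearchUrl(
  query: string,
  apiKey: string,
  searchEngineId: string,
  start: number = 1
): string {
  const params = new URLSearchParams({
    key: apiKey,
    cx: searchEngineId,
    q: query,
    searchType: "image",
    safe: "active", // enforce SafeSearch on every request
    start: String(start),
  });
  return `https://www.googleapis.com/customsearch/v1?${params.toString()}`;
}
```

Using `URLSearchParams` also takes care of encoding search text that contains spaces or special characters.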

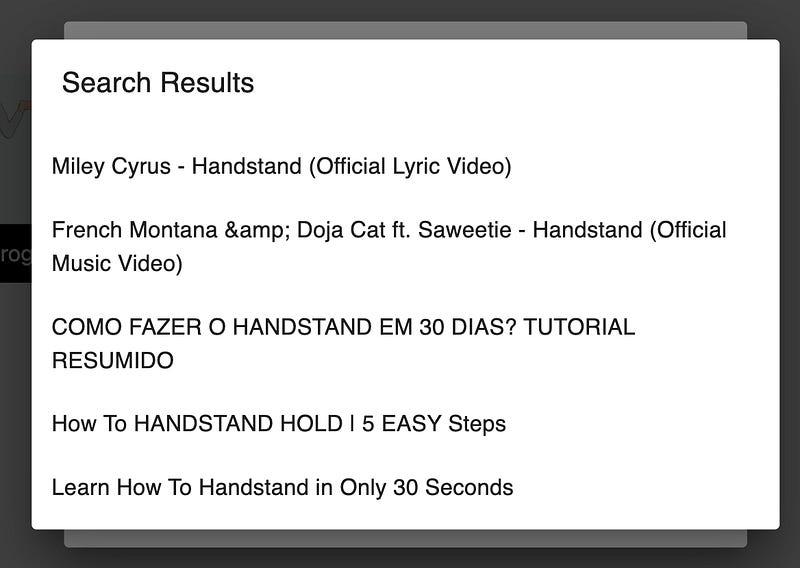

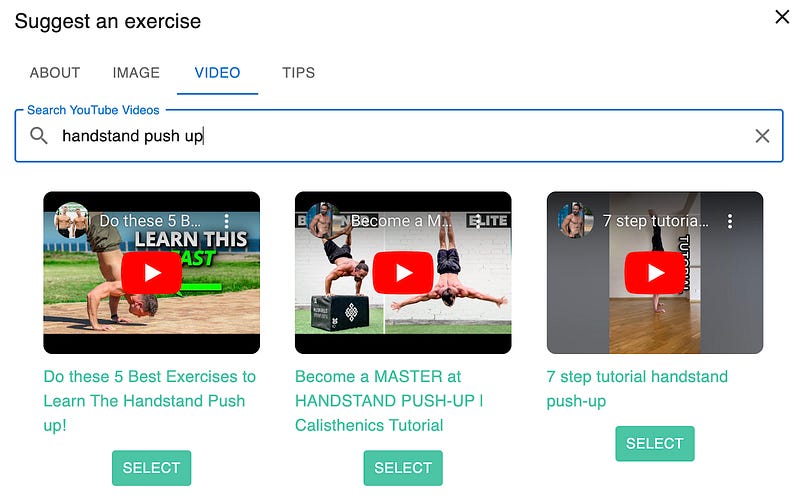

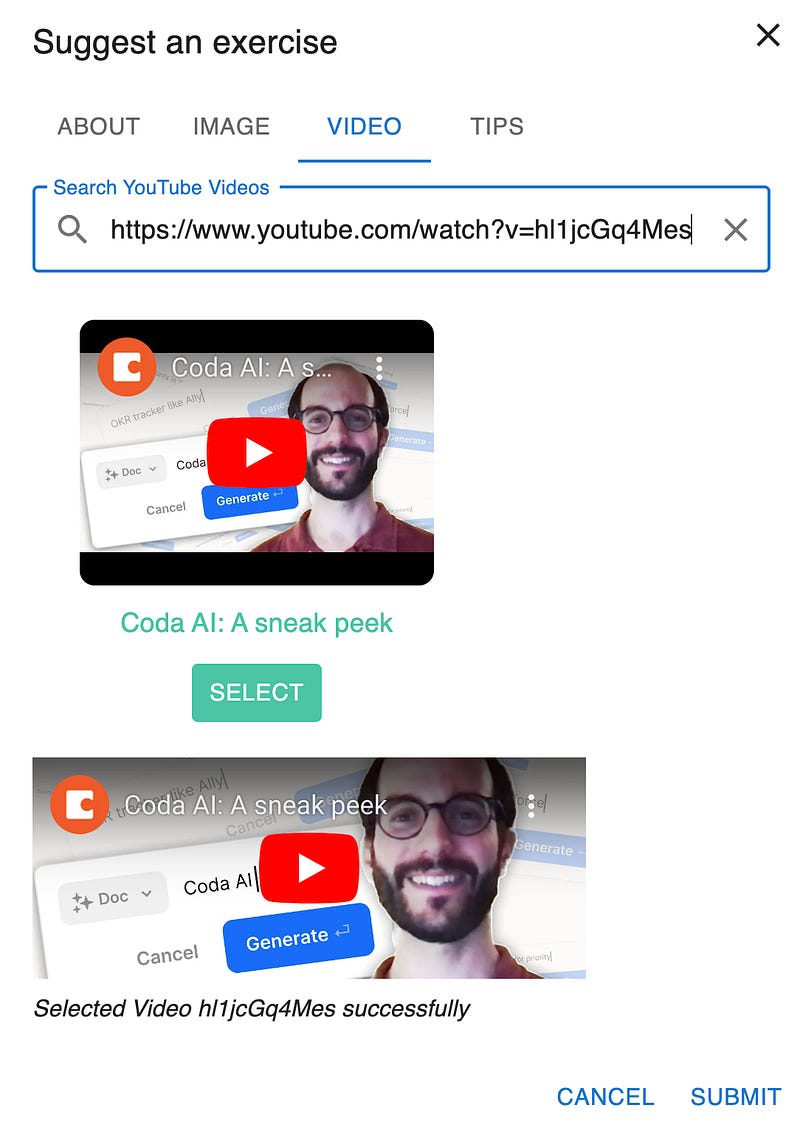

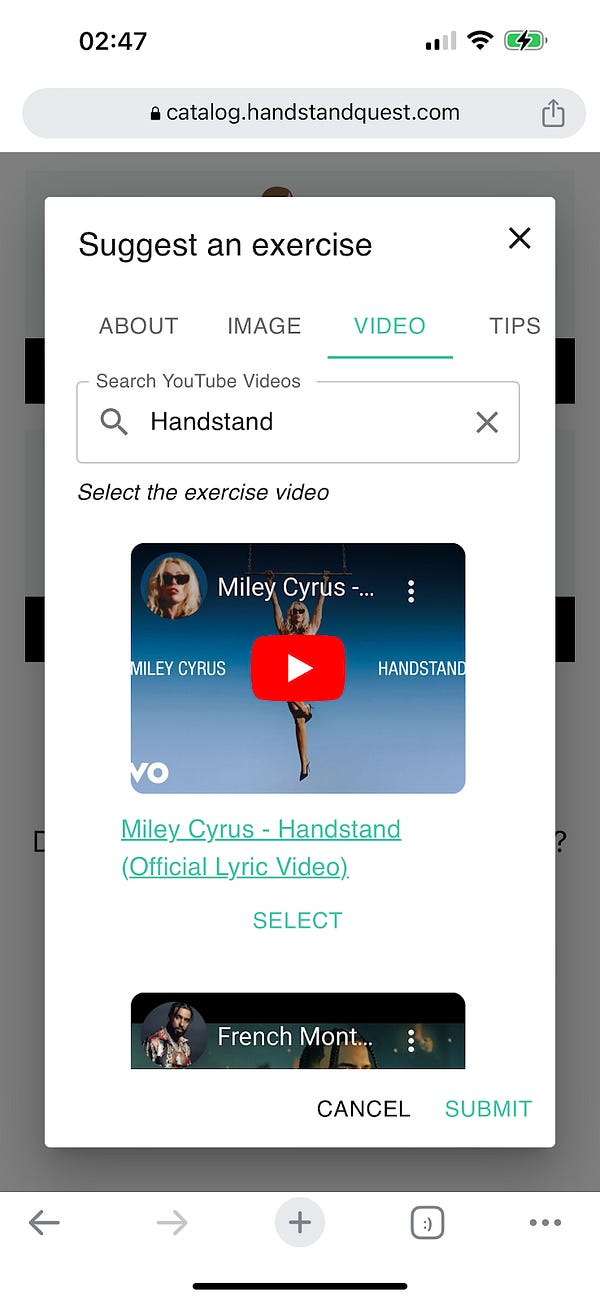

YouTube Video Experience

Who would want to provide a video with this experience? Probably, no one.

I’m not going to enable any file upload capabilities. Instead, if I make it really easy for a user to find a YouTube video, then I’ve achieved my goal.

I can start to imagine what this looks like, but what if I pass it on to ChatGPT and see what it comes up with.

It generated a new component to search YouTube via API. The logic was insecurely done on the client side (exposes API key), so I instructed it to move it to the server side. It generated a new API route in NextJS with the logic to search YouTube for videos given a search keyword. The url being hit in the React component changed from https://www.googleapis.com/youtube/v3/search?part=snippet&q=${query}&type=video&key=${API_KEY} to /api/youtube-search?query=${query}.
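To illustrate the split, here is a minimal sketch of the two URLs involved. The helper names are hypothetical, and the real API route also has to call the upstream URL and return the results; the point is only that the key is added on the server, while the browser sees nothing but the query.

```typescript
// Server side only: builds the upstream YouTube Data API URL.
// `apiKey` would be read from a server-side environment variable.
function buildYouTubeSearchUrl(query: string, apiKey: string): string {
  const params = new URLSearchParams({
    part: "snippet",
    q: query,
    type: "video",
    key: apiKey,
  });
  return `https://www.googleapis.com/youtube/v3/search?${params.toString()}`;
}

// Client side: the path the React component requests. No key is involved.
function buildClientSearchPath(query: string): string {
  return `/api/youtube-search?query=${encodeURIComponent(query)}`;
}
```

Encoding the query in both places matters: with plain string interpolation, a search term containing `&` or `#` would silently change the request.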

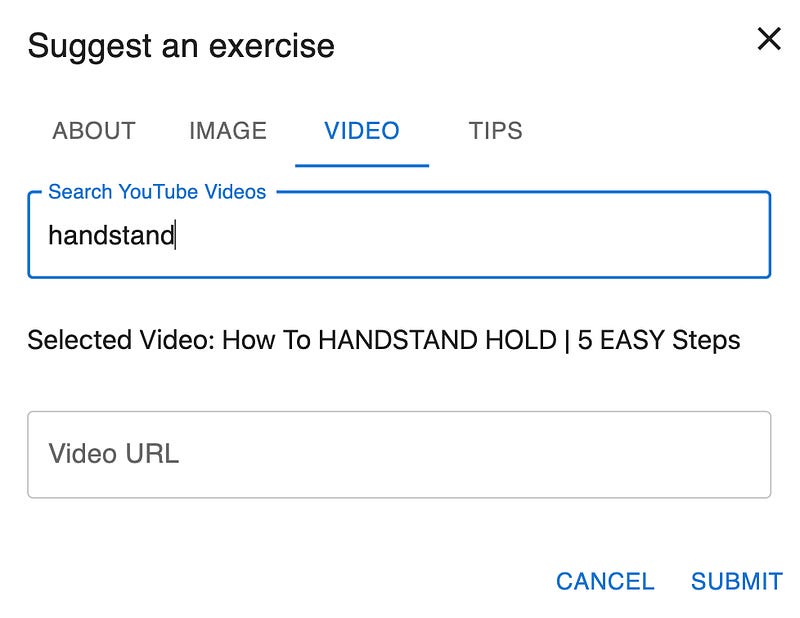

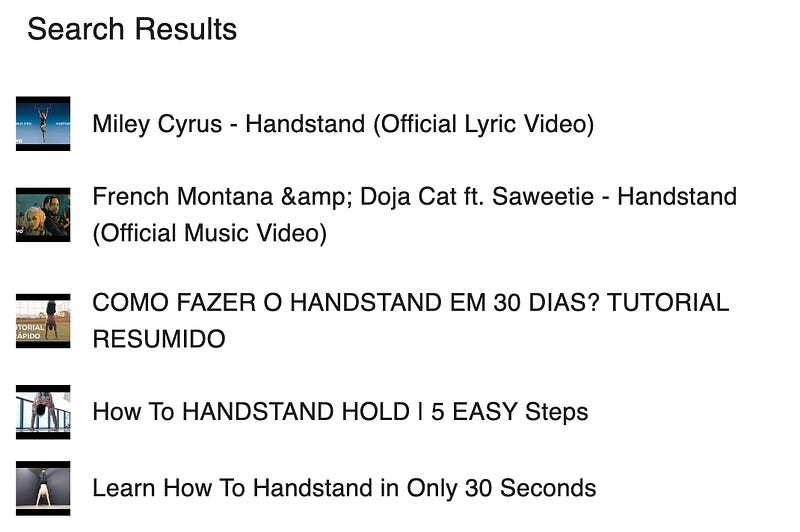

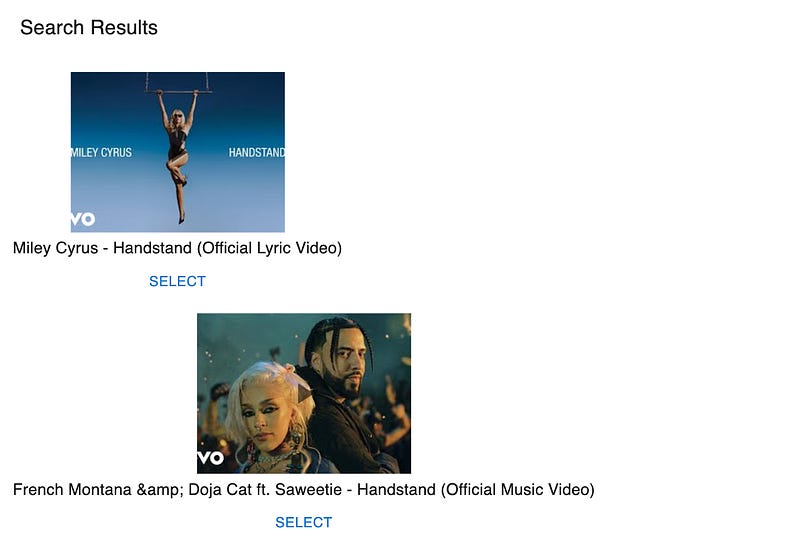

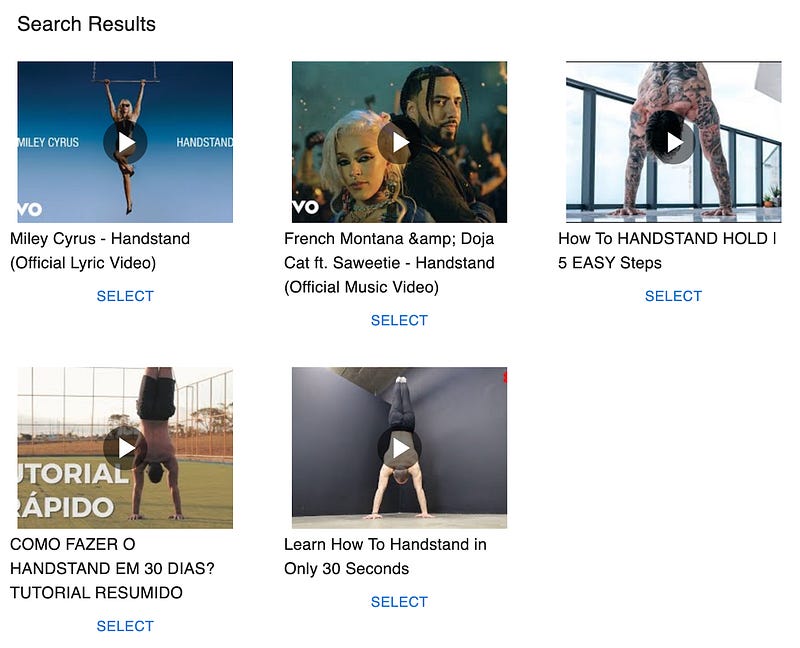

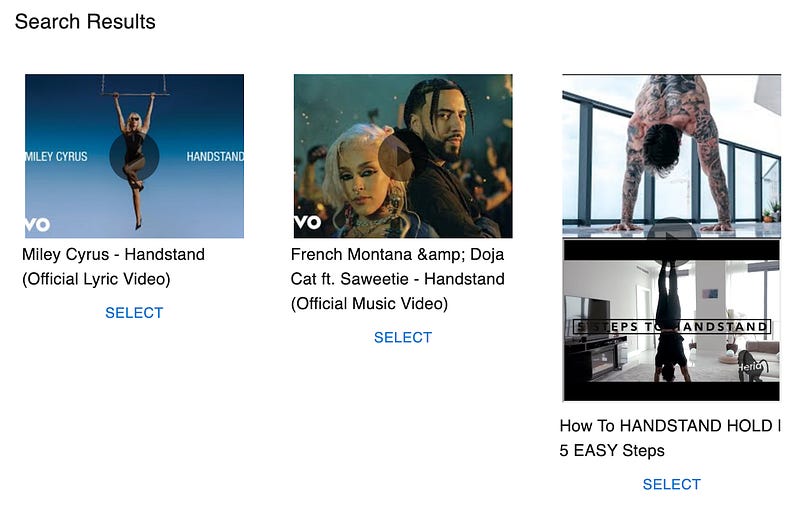

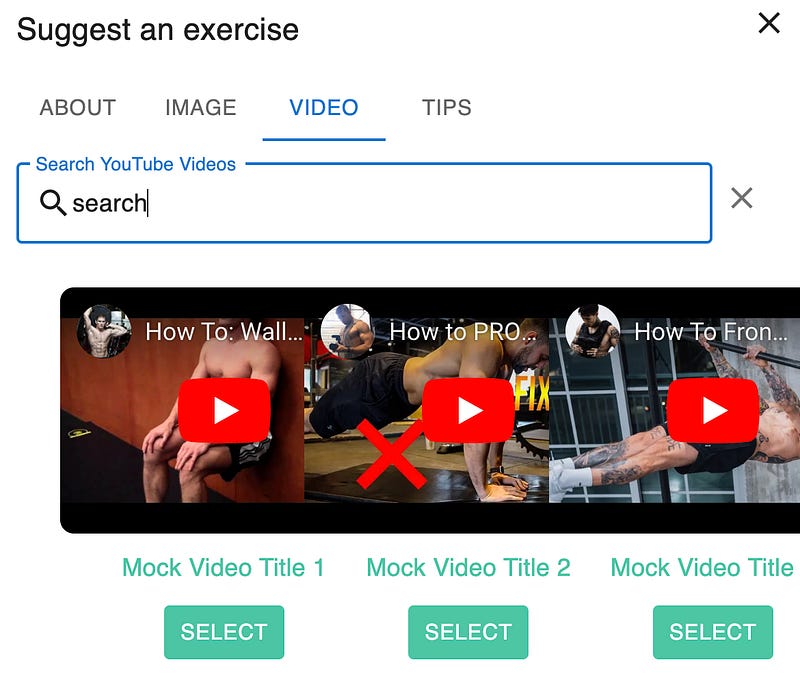

Here are the YouTube search results.

When an item is selected in the list, the video name is passed back from the Dialog to the Video tab.

I asked GPT to generate thumbnails for the videos.

I want the thumbnails to be larger and have the Youtube play button on them. When clicked, I would like to be able to play the video in a small popup dialog. I would also like to add a “Select” button under each thumbnail to actually enable the selection of the video.

So I asked ChatGPT, and I updated the component after I installed react-player via NPM.

The layout is weird. Clicking on a video opens a dialog with the thumbnail barely visible, and when clicked, it takes you to YouTube in a new tab.

April 2nd ~ 9pm 🫶: I’m out. I need a break from all of this.

April 3rd at 4 pm 👔: Two hour work meeting, coupled with another 1–2 hours of work.

Improving YouTube Video Experience

April 4th 1 am 🫶 💬: After a full day of exploring, drinking, walking and eating in Lisbon, it’s 1 am, and I’m ready to write some code. I mean, have ChatGPT write me some code.

Is my role in this as a programmer, with ChatGPT being the assistant? Or am I more of a Code Plugger?

For that, I also asked ChatGPT.

As for your role in this scenario, when working with ChatGPT, you can think of yourself as a programmer with ChatGPT being your AI assistant. You provide the high-level requirements and guidance, and ChatGPT helps you by generating code snippets or offering suggestions. The term “Code Plugger” isn’t a standard term in the industry, but if you prefer, you could use it to describe your role while working with ChatGPT.

Or a Code Connector?

Here is what ChatGPT has to say about it.

In summary, while “code connector” is not a standard term, it could be interpreted as a concept, tool, or method that helps connect different parts of code, modules, or systems within software development.

Then I asked what if the Code Connector is a human?

If a “code connector” were a human, it would be someone who plays a key role in integrating different parts of a software system or facilitating communication between different software components, teams, or platforms.

Plugger or Connector, it’s time to move on and get productive getting things done!

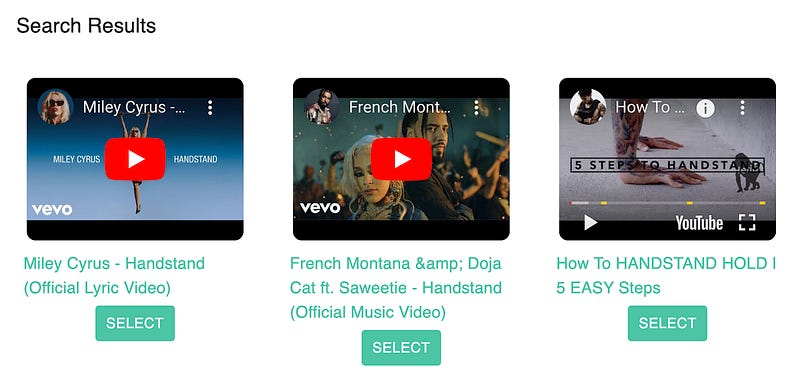

Here are the improvements I would like to make:

- Show the YouTube videos in a grid that is web responsive, it becomes more like a column on a mobile device

- When the video thumbnail is clicked, I would like to play the YouTube video within the thumbnail

- I would like the Play button on the YouTube video to be more visible

Here are the changes ChatGPT recommended when I asked:

Here’s an updated version of your code to improve the user experience as you requested:

- Display YouTube videos in a responsive grid that becomes a column on mobile devices.

- Play the YouTube video within the thumbnail when clicked.

- Make the play button more visible.

To achieve this, I’ve made the following changes:

- Imported Grid and GridList from @mui/material.

- Created a new CSS file named YouTubeSearchBox.module.css to style the components.

- Modified the ListItem component to use Grid and GridList for responsiveness.

- Updated the Play button to be more visible with a background color.

There were some bugs. For example, ChatGPT imported GridList which does not exist in @mui/material, but it was able to fix that on prompting.

Playing the video still shows me this weird behavior.

Some additional things I would like to change:

- On clicking the button to play the video, I want the video to play within the thumbnail. It might be better to just iframe the YouTube video rather than using react-player/youtube.

- I would like the SELECT buttons to be horizontally centered.

ChatGPT told me:

I’ve made the requested changes:

- Replaced react-player/youtube with an iframe to play the video within the thumbnail.

- Horizontally centered the “Select” buttons.

The result is a mess. I’ve decided to do it myself at this point. I fixed the issue below.

Next, I want the YouTube title to be in a smaller font, and to use the NextJS <Link> component to open the YouTube video in a new tab. I asked ChatGPT and I got something with an error, which it then fixed on prompting.

I then asked ChatGPT to make SELECT an animated button and place all the SELECT buttons in the Grid on the same line. It went too fancy, importing a @mui/system component. I tried again: Improve the look & feel of the SELECT button (add background) and make them all show on the same line. The UI looked malformed, so I made some edits and ended up with this.

Shortly after, I stopped seeing any YouTube results. I think the API is probably blocking me after all the requests I’ve made. As a quick way to investigate, I asked ChatGPT to update my NextJS API to generate mock videos if I pass a “mock” parameter to it.

Ok, this proved there is something off with the YouTube API, but I’ll leave dealing with it to another time.

Searching In Place

Right now, the video lookup behavior is weird. It was all ChatGPT’s touch.

Instead of opening a dialog when a user clicks on the “Search YouTube Videos” text box, I would like to:

Show a search icon in the “Search YouTube Videos” field

As the user starts typing, with debouncing, and instead of opening a separate dialog, show the Grid of YouTube videos directly under the search box.

When a user clicks the “SELECT” button:

Update the “Search YouTube Videos” field with the URL of the YouTube Video

hide the YouTube grid

Show the thumbnail (75% width) of the selected video

Show italic text under the video that says: Selected Video “video Id” successfully, where “video Id” is the YouTube ID of the video

When there is text in the field, show a “clear” button. When clicked, it clears the text in the field and resets the search.

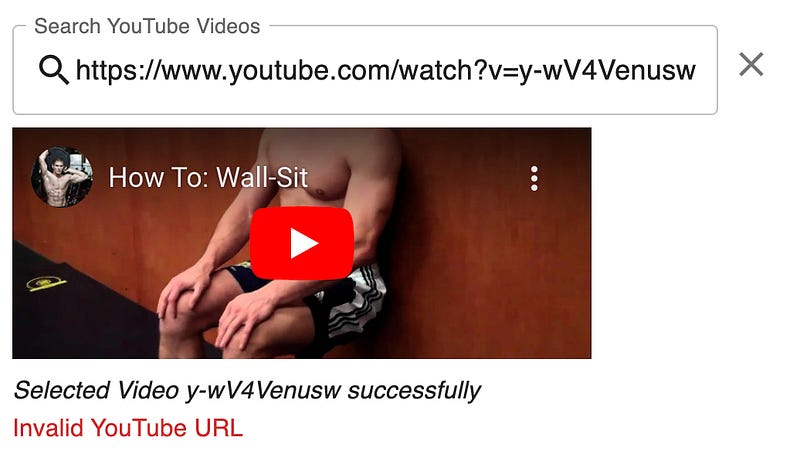

When the user enters a valid url, validate that the url points to a youtube video. If it does, preview the Youtube video in the space below the text field. If it is a url that does not point to a Youtube video, show an error message.

The user should be able to navigate the Youtube grid in pages.
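The URL-validation requirement above boils down to one question: can a YouTube video ID be pulled out of the text? Here is a minimal sketch of that check. The function name, the set of URL shapes it accepts, and the assumption that video IDs are 11 characters long (as in the `KNC5lkoE2Fs` example used in my app) are all mine, not something the YouTube API guarantees.

```typescript
// Returns the YouTube video ID if `input` is a recognizable YouTube video URL,
// or null if it is not a URL or does not point to a YouTube video.
function extractYouTubeId(input: string): string | null {
  let url: URL;
  try {
    url = new URL(input.trim());
  } catch {
    return null; // not a URL at all: treat the text as a search term instead
  }

  // Normalize "www.youtube.com" and "m.youtube.com" to "youtube.com".
  const host = url.hostname.replace(/^www\.|^m\./, "");
  let id: string | null = null;

  if (host === "youtu.be") {
    id = url.pathname.slice(1); // https://youtu.be/<id>
  } else if (host === "youtube.com") {
    if (url.pathname === "/watch") {
      id = url.searchParams.get("v"); // https://www.youtube.com/watch?v=<id>
    } else {
      const match = url.pathname.match(/^\/(embed|shorts)\/([^/]+)/);
      id = match ? match[2] : null; // /embed/<id> or /shorts/<id>
    }
  }

  // Assumption: IDs are 11 characters from the URL-safe base64 alphabet.
  return id !== null && /^[A-Za-z0-9_-]{11}$/.test(id) ? id : null;
}
```

A `null` result for something that parses as a URL is the case where the error message should be shown; a `null` result for plain text means "run a search".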

First, I provided ChatGPT with the latest code of ExerciseSuggestion, and then requested the changes above. The change was a little bit of a shit show with a lot of my “hard-earned” code that I previously wrote removed. It also was not allowing me to paste the whole code of YouTubeSearchBox.tsx so it can make the suggestions based on what’s already there. For that reason, I’ve decided to feed ChatGPT the changes I need one by one.

I tried again, and I was able to paste the code, and here is what it said:

Based on your latest code, I have made the required changes to implement the features you requested:

- Show a search icon in the “Search YouTube Videos” field

- Show the Grid of YouTube videos directly under the search box with debouncing

- Update the “Search YouTube Videos” field with the URL of the YouTube Video upon selection

- Hide the YouTube grid and show the thumbnail of the selected video

- Show italic text under the video with the selected video ID

- Add a “clear” button when there is text in the field

- Validate the URL and preview the YouTube video when a valid URL is entered

- Show an error message when the URL does not point to a YouTube video

- Add pagination for the YouTube grid

Here is the experience.

When I click “Select”, some of the capabilities are there.

Also, there are bugs where once I search, additional searches do not work. In fact, I have a good amount of tweaking to do, and I am tired.

April 4th 💬: I’ve spent 2 hours since 1 am working on this. I’m starting to wonder whether this session was productive at all.

Could I have done better if I did this without ChatGPT’s help? Maybe if I were more proficient in TypeScript, React and NextJS?

YouTube Search Experience

April 4th 💬: I woke up a little earlier than expected. It’s 10:30 am now.

The first thing I want to fix is the layout. I tried a few prompts with ChatGPT but I did not get what I wanted. However, I got the idea of changing the Dialog maxWidth to “md”, and that seems to resolve the issue.

Next, I moved the Clear “X” button inside the Search box. I also added a little bit of space after the Search icon. I got the gallery to disappear when the Clear button is clicked. Those were very quick changes; it would have probably taken more time asking ChatGPT for help.

The next issue is that whenever I change the search term, the list of videos does not refresh. Only after clearing the Search box does searching become effective again. I removed the lodash debouncing and created a custom useDebounce hook, and that seems to do the magic. The best way to validate is to remove the “mock” videos and try the search on real YouTube videos. Alright, it looks like it worked out!

One other bug I found: if I paste a YouTube video URL in the search box, both the gallery and the selected video are shown.

I went ahead and fixed this bug and other state-related bugs without leaning on ChatGPT.

Auto-Search Based on Exercise Name

If the user goes to the video tab, and the search box is empty, I would like it to get automatically populated with the “Exercise Name” set in the About Tab. This is similar to the behavior I built in the image tab, so I just looked up the logic from there rather than relying on ChatGPT.

YouTube API Rate Limiting

I am calling the YouTube Data API from my backend, so I’m concerned that I will be blocked if there is enough usage of the feature. I asked ChatGPT how many searches I can do per day:

When using the YouTube Data API’s search functionality, each search request consumes 100 units of quota from your daily allocation.

For example, if you have the default daily quota of 10,000 units, you can make up to 100 search requests in a single day before reaching your limit (10,000 units / 100 units per search request = 100 search requests).

Remember that the quota usage can change over time, so it’s always a good idea to check the YouTube API documentation and your Google Cloud Console for the latest information on quota costs and your project’s quota usage.

It does look like I would easily get to my daily limit, with just 100 searches a day! The path forward is a little complicated, based on this page from Google. I went through a very painful form that Google had me fill to get more quota, let’s hope somebody at Google acknowledges it.

Update from the Future (April 9th): I haven’t heard anything back from Google about increasing the limit.

April 4th 🫶: Now, it’s time to go do other stuff in life. I’m in Portugal after all!

Current Exercise Data Model

April 4th 3:30 pm 💬: Back.

I think now we’re getting to the point of all of this work! When someone requests an exercise, I’d like to make it as easy as possible to plug the suggestion into

https://catalog.handstandquest.com/

and more importantly, into the Handstand Quest iOS App!

For that to be as smooth as possible, when an exercise request is submitted, I want the format of the request to be exactly like the one I have in the iOS app.

Here is a sample JSON used in the app for the handstand exercise. This gets read on app launch and gets serialized into a Swift object when the app starts.

{

"id": "Handstand",

"name": "Handstand",

"category": 3,

"description": "Before starting:\n- make sure you warm up your wrists and stretch your shoulders.\n- be comfortable with bailing out of a handstand (ex: cartwheel)\n\nPlace your hands on the floor, kick with your dominant leg.\n\nMake sure to focus on getting your pelvis stacked over the shoulders and wrist, as your kick up and when you reach up. Make sure your arms are straight and core engaged.\n\nBreathe and know when you need to stop to avoid injuries.",

"tenthSecondRepInterval": 50,

"pageId": 1,

"met": 10.0,

"voiceCommands": [

{

"title": "handstand",

"id": 1

}

],

"tips": [

{

"title": "Form follows function",

"body": "Cambered hands, active shoulders, locked elbows, hips over head, feet over hips… really whatever feels easier to get you to hold the handstand longer."

},

{

"title": "Make it automatic",

"body": "By doing countless handstands, we get from conscious to automatic brain behavior. It starts becoming more like driving, and thus a lot easier to hold."

},

{

"title": "Regaining balance",

"body": "Lose balance => correct the flow => recover. Real progress comes from struggling to correct your balance by squeezing your body and fingers tightly in an attempt to recover."

}

],

"subcategory": "Handstand Variations",

"metricType": 0,

"hasDemo": true,

"hasHowTo": true,

"howToYouTube": "KNC5lkoE2Fs"

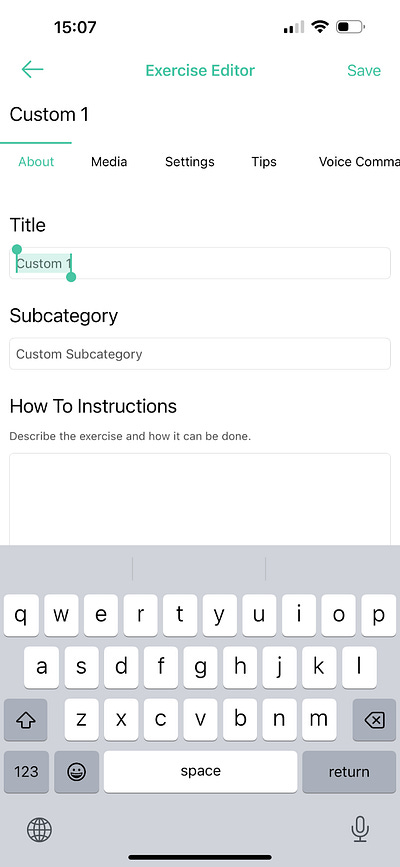

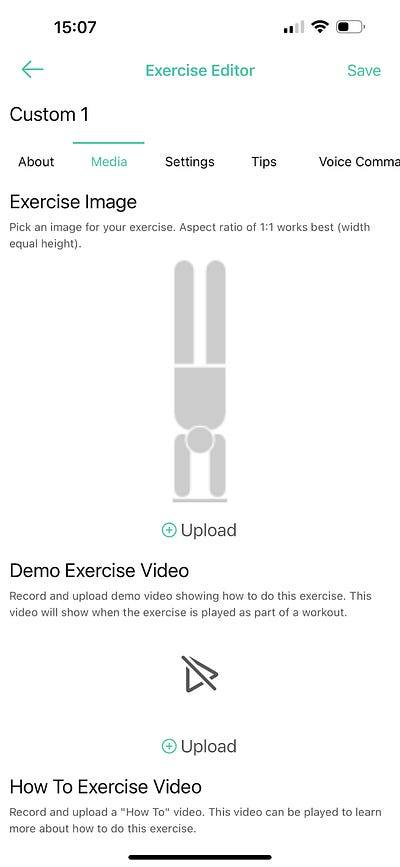

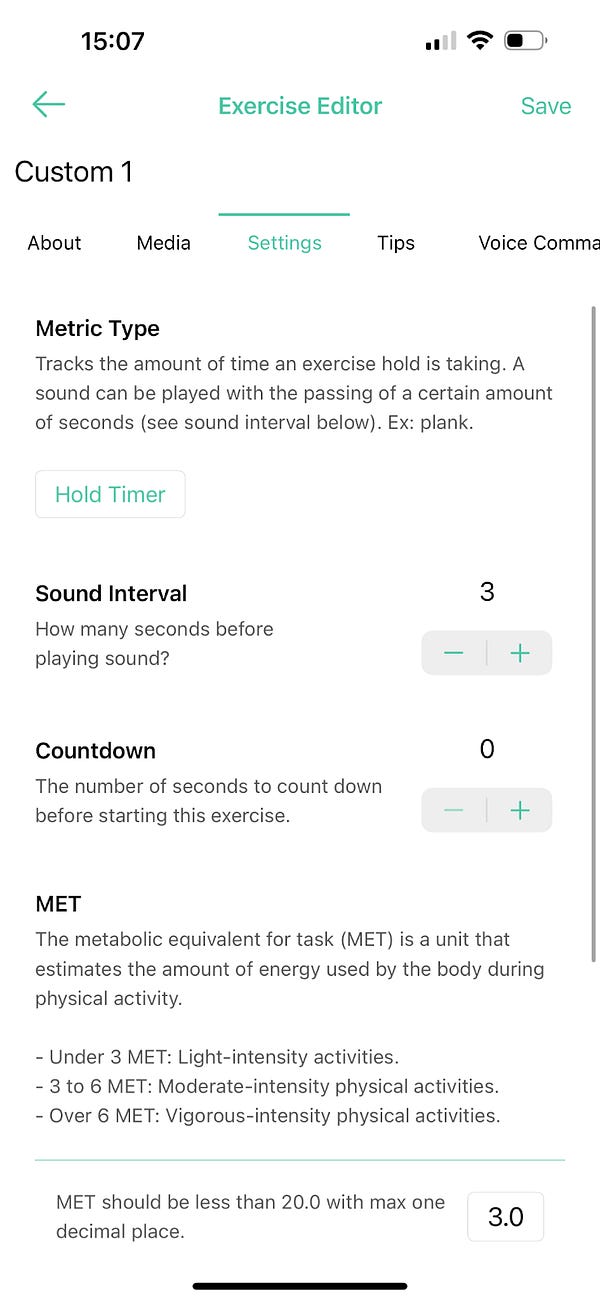

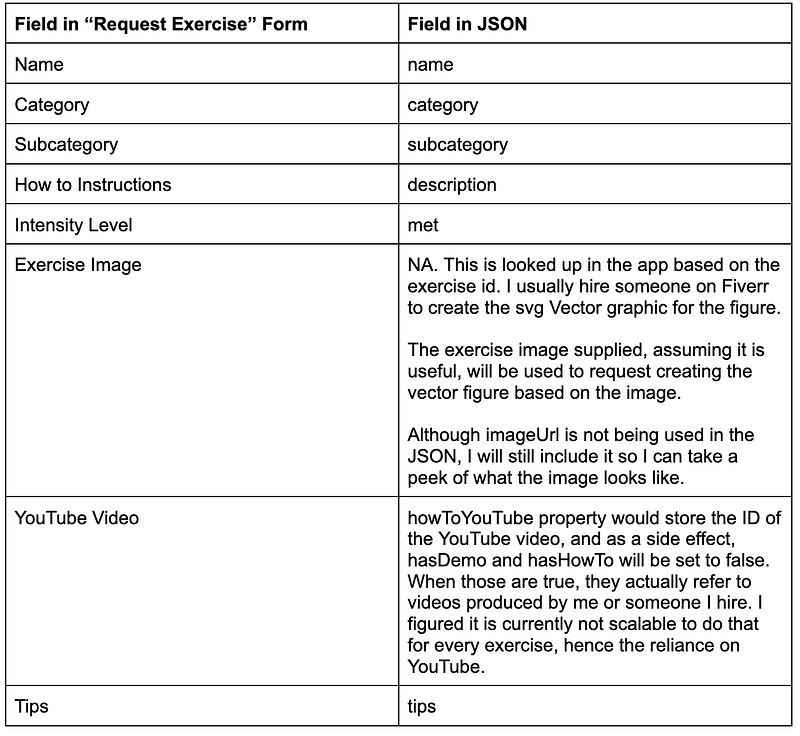

}It would be also worthwhile to share a visual of the current experience I have in the mobile app to edit a builtin exercise or create a custom exercise.

The About, Media and Tips views in the mobile app are very similar to the ones we are building in this Web App.

The below Settings and “Voice Commands” views allow the user to configure their experience in the mobile app. For the most part, they are currently not related to this web app, except for the MET field in settings, which maps to “Intensity Level” in the web app.

Here is how the fields in the “Request Exercise” Form would map to the current JSON format I have in the app.

Fields in JSON but not in the “Request Exercise” Form. Find a way to set the fields behind the scenes when the “Request Exercise” Form is submitted.

Acting on the Exercise Suggestion

For me to most effectively incorporate an exercise suggestion, I need it to be in a JSON format that matches the data model defined earlier. So if I generate the JSON and send it in an email or save it in storage as a JSON file, then I should be able to take action on it.

I would copy the JSON file content into a local JSON file that has the definition of all exercises. This file gets loaded by the mobile app. I then use an Electron app I wrote to generate an MD file from JSON to be loaded statically by this NextJS web app.

Because ChatGPT is capable of converting a JSON file to MD in a specific format, I could generate the MD file too, then either:

Get the MD file pushed to the web app repository

Put the MD file in Google storage or email attachment so I can easily download it

Either way, I will use my internal tool because it is free of cost to me.

Moving on, I’ll do the following: on submitting the exercise request, I will send myself an email with all the details nicely formatted in HTML, and towards the end of the HTML, I’ll include a link to the JSON file stored on Firebase Storage.

April 5th at 8:30 am ⚽: The Washington EPD Boys (born 2009) team played their first game at the Iber Cup, winning 3–0. My kid made an assist. I was relieved, since I wasn’t sure how he would perform on a team one year older (he was born in 2010) and with players he had never played with before.

April 5th at 4 pm 👔: Two-hour work meeting, coupled with another ~1 hour of work. Go get dinner, then spend another ~4 hours writing promotion docs.

Generating the JSON

April 6th at 1 am 💬: Back.

I told ChatGPT to generate the JSON from the request form. I showed it a sample JSON and told it how to map the data as follows:

It did it out of context, so I gave it the React state variables in the file and it generated what I was looking for.

I’ve changed the submit button to be only enabled when an exercise name has been specified. When the user clicks submit, I generate the JSON and print it to the console window for now.
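As a rough sketch of that step, the function below turns the form state into an object shaped like the sample JSON. The `ExerciseForm` field names are hypothetical stand-ins for my React state variables. The mapping covers only fields with an obvious counterpart in the form: "Intensity Level" goes to `met` as described earlier, while using the name as the `id`, the How To instructions as `description`, and the presence of a video for `hasHowTo` are assumptions based on the handstand sample. Fields the form does not collect (`pageId`, `voiceCommands`, and so on) are left out.

```typescript
interface Tip {
  title: string;
  body: string;
}

// Hypothetical shape of the form state; the real component's names may differ.
interface ExerciseForm {
  exerciseName: string;
  category: number;
  subcategory: string;
  howToInstructions: string;
  intensityLevel: number;
  tips: Tip[];
  youTubeVideoId?: string;
}

// Subset of the app's exercise JSON that the form can populate.
interface ExerciseJson {
  id: string;
  name: string;
  category: number;
  description: string;
  met: number;
  tips: Tip[];
  subcategory: string;
  hasHowTo: boolean;
  howToYouTube?: string;
}

// The submit button is only enabled once an exercise name has been entered.
function canSubmit(form: ExerciseForm): boolean {
  return form.exerciseName.trim().length > 0;
}

function buildExerciseJson(form: ExerciseForm): ExerciseJson {
  const name = form.exerciseName.trim();
  const exercise: ExerciseJson = {
    id: name, // assumption: the sample uses the exercise name as its id
    name,
    category: form.category,
    description: form.howToInstructions, // assumption: instructions map to description
    met: form.intensityLevel, // "Intensity Level" in the web app maps to MET
    tips: form.tips,
    subcategory: form.subcategory,
    hasHowTo: Boolean(form.youTubeVideoId), // assumption: true when a video was selected
  };
  if (form.youTubeVideoId) {
    exercise.howToYouTube = form.youTubeVideoId;
  }
  return exercise;
}
```

Printing `JSON.stringify(buildExerciseJson(form), null, 2)` gives the formatted JSON that I log to the console for now.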

Saving the JSON and getting its Download Url

I would like to store the formatted generated JSON in Firebase storage. I’ve set the following security rules so that anyone can create on the backend but not overwrite an existing file.

match /exercises/json/{ExerciseId}/{DateString} {

allow create, read;

}

With a little refactoring to fetch the exercise ID at any time based on the name, I asked ChatGPT and it generated the code.

Generating the HTML and sending via Email

I already have a mechanism in my system to enable sending email notifications. I leverage the Trigger Email from Firestore Firebase extension. So all I need to do is save documents in that location, and the extension will take care of sending the email.

I asked ChatGPT to generate the HTML from the JSON, then simply transformed that into an EmailMessage object that gets sent to a Firestore document, which ends up triggering the extension to send the email.

The email looks as follows:

Match Web App Colors

I would like to replace all the blue in the MUI library with the Handstand Quest green color.

ChatGPT showed me how to create a custom theme and apply it to my application using the ThemeProvider component.

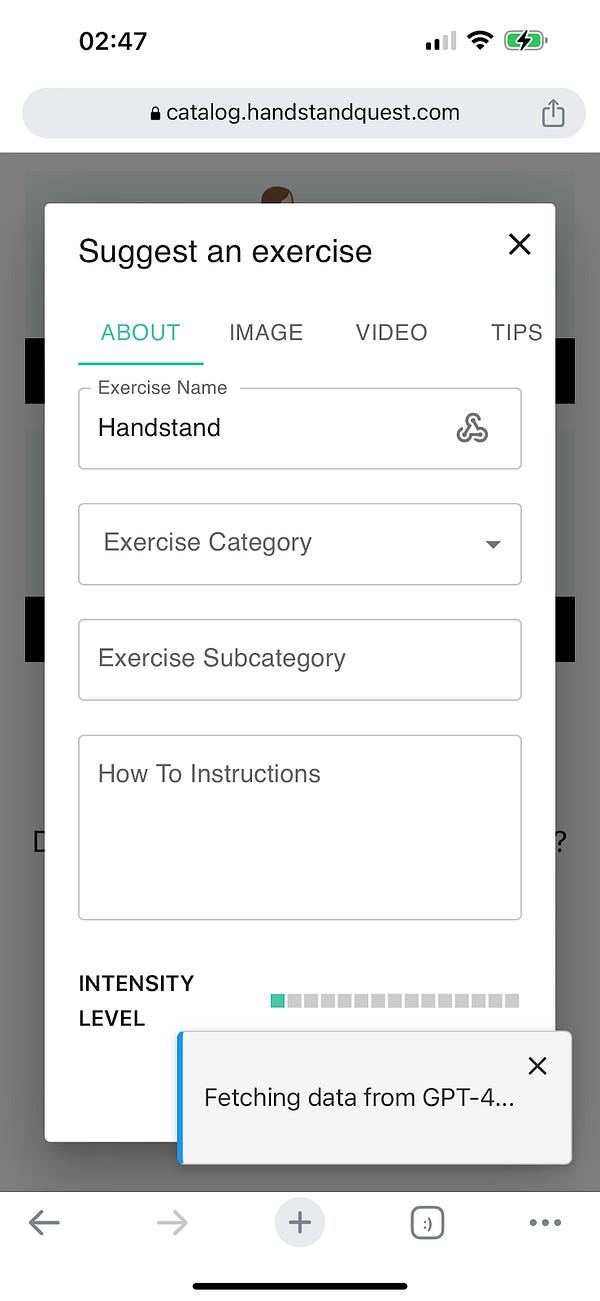

Using GPT API to generate exercise data

Once the user specifies an exercise name, automatically generate all the other exercise data. Users should then be able to update it however they desire and submit.

In addition to removing friction for users when suggesting an exercise, this is a pretty effective mechanism (internal tool) for me to continue to easily build out the exercise catalog over time, with curated quality content mostly generated by GPT.

April 6th 💬: Let’s see how long building this capability would take. It’s 2:30 am. I’ve been actually working on this project for 1.5 hours. The 6 hours before that, working on mid-year reviews and promotion endorsements for my team at work.

Side note: Here is some additional context on what I do. I have a full-time job as a manager, which I genuinely enjoy, particularly when it comes to collaborating with my team. However, I also feel a strong desire to pursue my passions and interests. As an engineer, I find great satisfaction in creating and building my own projects. It’s like a digital form of art or craftsmanship, akin to woodworking. Not only does this provide a sense of fulfillment, but it also has the potential to generate income. So, why not focus on these enjoyable and rewarding pursuits instead of spending time on activities that are less fulfilling and fun?

Plugging in GPT-4 code

Here is what I will instruct ChatGPT to do.

Here is the React code for my exercise request form (just include fields in the About tab)

<code not included for brevity>

- When the user specifies an exercise name, I would like to surface a “Generate” button in the right side of the Exercise Name field

- When the user clicks the “Generate” button, I would like it to do the following:

— Call the GPT 4 API to get the category, subcategory, How To Instructions,

Intensity Level, and automatically update those fields with the response I get back from the GPT 4 API

I got some useful code, some irrelevant, and some of my requests ignored. I guess it is too much to ask.

April 6th 3:30 am 💬: One hour later, I am still getting HTTP 404 and 400 back from the API. I think this replit looks like a reliable source to interact with OpenAI via NextJS App.

April 6th 9:40 am ⚽: The soccer team had a draw with Leão Altivo. We had 77% possession, 10 shots on goal, but we just couldn’t finish. My kid made a really nice shot that the goalkeeper managed to touch and it hit the post. That was the most dangerous threat we had in the game, but unfortunately, no goal!

April 6th at 11 am 💬: Here I am now, in between soccer games, updating the code based on the replit. I was able to use the OpenAI library, which is nice. I was also able to call the API directly via Axios. With both approaches, though, I was getting back a 429 (Too Many Requests) from OpenAI.

April 6th at noon ⚽: The soccer team won 7–0. My kid earned a penalty then finished it.

April 6th at 5:50 pm ⚽: another win 8–1.

April 7th at 10:50 am ⚽: We lost 1–5 in the playoffs to a really good team, FC Shakhtar Donetsk. In the first half, our team was surprised by the opposing team’s level of play and speed; they were clearly at a much higher level than the other four teams we had played, and they scored four goals. In the second half, we adjusted to their style of play, and each team scored one goal.

April 7th at 4:30 pm 👔: 1.5 hours of work meetings and about ~0.5 hour of work.

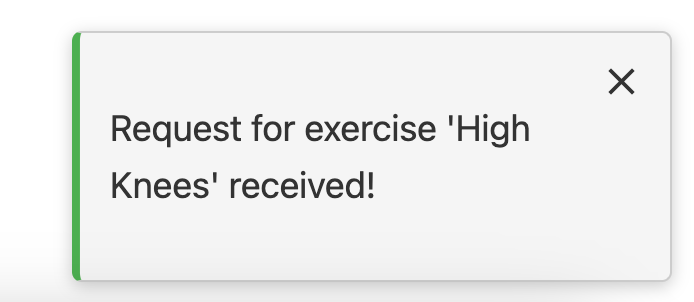

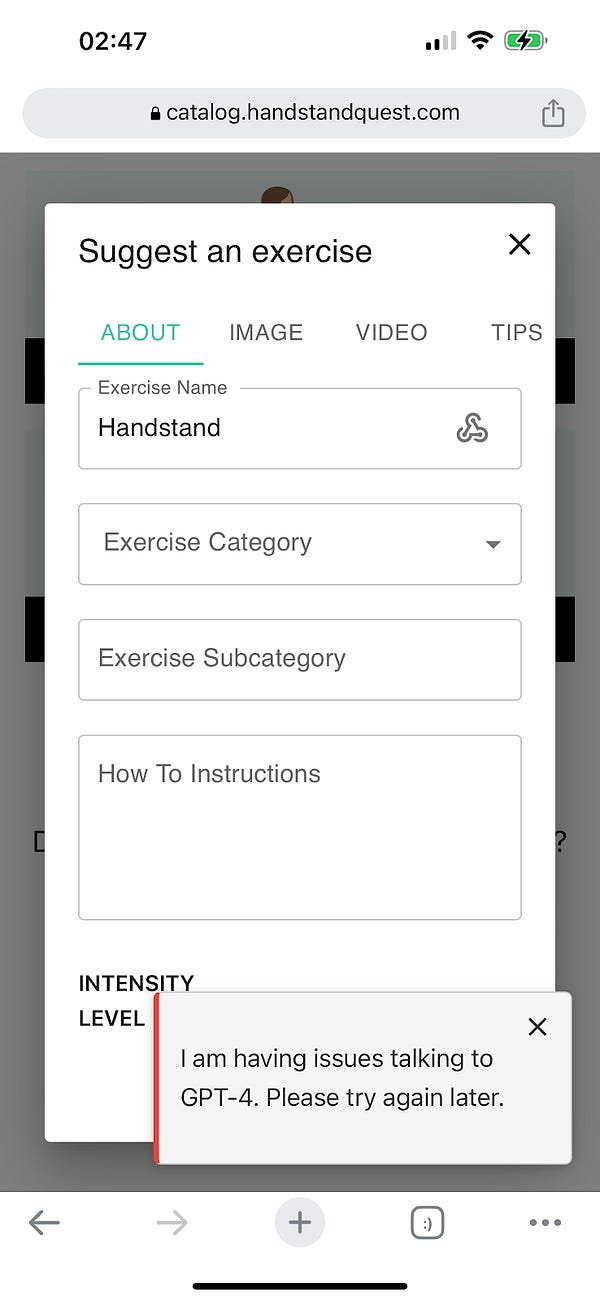

Showing User Notifications

April 7th at midnight 💬: The OpenAI errors seemed like something I cannot control, so I have decided to route my energy into surfacing errors and successes directly in different parts of the UI.

When a failure happens, a notification message shows up in the UI. ChatGPT helped me generate all the code, which lets me surface the notification from any child React component.

I’ll also continue to improve the look & feel of the notification UI and leverage it in other places in the web app. For example, when the request for a new exercise is completed successfully.

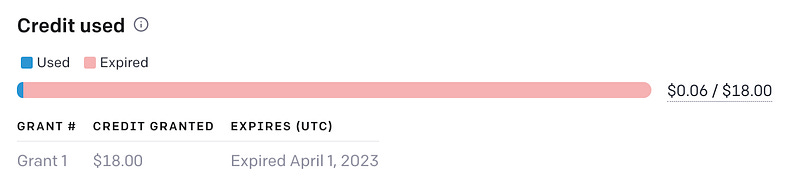

Troubleshooting GPT API

Back to the really important issue: why am I getting errors trying to call the GPT API? I have used other methods to make requests to the OpenAI GPT endpoint, but I am still getting the 429 response. I have decided to reach out to support@openai.com and see how it goes from there.

April 7th at 1 am 🫶: I’ll come back and continue building this capability once I stop getting 429 rate limit errors from the GPT API.

April 7th at 7 pm 💬: Wait a second, although I have a ChatGPT monthly subscription, the OpenAI API subscription is separate. I think I am getting the failures because I did not add billing details to my OpenAI account. I am still blocked because either OpenAI or my bank is not letting me pay from Portugal (or through a USA VPN that is not close to my zip code).

It looks like my “grant” for usage expired earlier this month.

I found a way to pay by adding another card. It took a few minutes, and now it is working, based on testing in OpenAI Playground!
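In hindsight, the body of the 429 response could have pointed to billing sooner. The sketch below assumes the error body has the shape `{ error: { type, code, message } }` and that quota or billing problems are marked with `insufficient_quota`; that matches my understanding of the OpenAI API, but please verify it against the current error documentation. The function and type names are hypothetical.

```typescript
// Assumed shape of an OpenAI error body; only the fields used here are listed.
interface OpenAIErrorBody {
  error?: {
    message?: string;
    type?: string | null;
    code?: string | null;
  };
}

type Failure429 = "billing" | "rate_limit";

// Distinguish "no quota or billing set up" from "too many requests".
// Assumption: quota problems carry "insufficient_quota" in type or code.
function classify429(body: OpenAIErrorBody): Failure429 {
  const { type, code } = body.error ?? {};
  return type === "insufficient_quota" || code === "insufficient_quota"
    ? "billing"
    : "rate_limit";
}

// Turn the classification into a hint for whoever is debugging.
function hintFor429(body: OpenAIErrorBody): string {
  return classify429(body) === "billing"
    ? "Check the plan and billing details of the OpenAI account."
    : "Too many requests; wait a moment and try again.";
}
```

Logging `hintFor429(await response.json())` whenever `response.status === 429` would separate the two cases without a support ticket.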

The GPT Experience

When the user types an exercise in the “Exercise Name” field, a “Generate” button will show up.

When the user clicks on the “Generate” button, a request (prompt) is made to GPT-4 to get the following fields:

Category: Identify a category for the exercise “${exerciseName}”. The response has to be a number between 1 and 5, where Warmup is 1, Progression is 2, Inversion is 3, Strengthen is 4, and Meditation is 5

SubCategory: Identify a subcategory for the exercise “${exerciseName}”. The response should be a string no longer than 20 characters.

Instructions: Fetch the How To instructions for the exercise “${exerciseName}”. The response should be a string between 200 and 500 characters.

Intensity: Identify the intensity level of the exercise “${exerciseName}”. The response has to be a number between 1 and 15, where 1 is easiest and 15 is hardest.

Tips: Generate in JSON format 3 tips for exercise “${exerciseName}” in the format [{ title, body }]
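The prompts above can be sketched as a small builder plus validators for the replies. This is an illustration, not the app's code: `buildPrompts`, `parseBoundedInt`, and `parseTips` are hypothetical names, and the validation rules simply mirror the limits stated in the prompts.

```typescript
type FieldName = "category" | "subCategory" | "instructions" | "intensity" | "tips";

interface Tip {
  title: string;
  body: string;
}

// One prompt per field, using the wording described above.
function buildPrompts(exerciseName: string): Record<FieldName, string> {
  const subject = `the exercise "${exerciseName}"`;
  return {
    category: `Identify a category for ${subject}. The response has to be a number between 1 and 5, where Warmup is 1, Progression is 2, Inversion is 3, Strengthen is 4, and Meditation is 5`,
    subCategory: `Identify a subcategory for ${subject}. The response should be a string no longer than 20 characters.`,
    instructions: `Fetch the How To instructions for ${subject}. The response should be a string between 200 and 500 characters.`,
    intensity: `Identify the intensity level of ${subject}. The response has to be a number between 1 and 15, where 1 is easiest and 15 is hardest.`,
    tips: `Generate in JSON format 3 tips for exercise "${exerciseName}" in the format [{ title, body }]`,
  };
}

// Take the first integer found in the reply and accept it only if it is in range.
function parseBoundedInt(reply: string, min: number, max: number): number | null {
  const match = reply.match(/-?\d+/);
  if (!match) return null;
  const value = Number.parseInt(match[0], 10);
  return value >= min && value <= max ? value : null;
}

// Accept the tips only if the reply is a JSON array of { title, body } strings.
function parseTips(reply: string): Tip[] | null {
  try {
    const parsed: unknown = JSON.parse(reply);
    if (!Array.isArray(parsed)) return null;
    const tips = parsed.filter(
      (t): t is Tip => typeof t?.title === "string" && typeof t?.body === "string",
    );
    return tips.length === parsed.length ? tips : null;
  } catch {
    return null; // The model replied with something that is not valid JSON.
  }
}
```

Validating each reply before it reaches the form matters because the model may wrap a number in a sentence or return prose instead of JSON.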

I made the requests to GPT-4 sequentially because when I tried to do them in parallel, I received HTTP 400 errors. As each request is made, a loading indicator appears next to the corresponding field. For instance, the screenshot below displays the fetching of the “How To Instructions” (not very clear in the screenshot since it’s an animation).
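A minimal sketch of that sequential flow follows, assuming each field has its own async fetcher. `FieldTask`, `runSequentially`, and `onLoading` are hypothetical names; in the app, `onLoading` would toggle the spinner next to the matching field.

```typescript
interface FieldTask {
  field: string;
  run: () => Promise<string>; // e.g. a call to the GPT endpoint for this field
}

// Run the tasks one after another, toggling a per-field loading flag around each.
async function runSequentially(
  tasks: FieldTask[],
  onLoading: (field: string, loading: boolean) => void,
): Promise<Map<string, string>> {
  const results = new Map<string, string>();
  for (const { field, run } of tasks) {
    onLoading(field, true);
    try {
      // Awaiting inside the loop is what makes the requests sequential.
      results.set(field, await run());
    } finally {
      // Always clear the indicator, even when the request fails.
      onLoading(field, false);
    }
  }
  return results;
}
```

The trade-off is latency: the total wait is the sum of all requests rather than the slowest one, which is the price of avoiding the errors seen with parallel calls.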

Then the Tips tab is automatically selected, and a loading indicator is shown until 3 tips are fetched from GPT.

All of this was working great locally, until I pushed the code to production. Given that it takes a little time to talk to GPT-4 to fetch things like an exercise “How To” description, it was timing out in production with error code HTTP 504! And as it turns out, Vercel only lets you change the timeout when you upgrade your plan! For that reason, I’ve decided to move from model “gpt-4” to model “gpt-3.5-turbo”.

Since gpt-3.5-turbo responds faster than gpt-4, the request to the API finished before Vercel timed out the request.
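Keeping the model name in one place makes a switch like this a one-line change. The sketch below builds a request for OpenAI's Chat Completions endpoint; `MODEL` and `buildChatRequest` are hypothetical names, and the API key is assumed to come from a server-side environment variable.

```typescript
type ChatModel = "gpt-4" | "gpt-3.5-turbo";

// Single switch point: the faster model keeps responses under the hosting timeout.
const MODEL: ChatModel = "gpt-3.5-turbo";

// Build the URL and fetch options for one prompt; pass them to fetch(url, init).
function buildChatRequest(prompt: string, apiKey: string, model: ChatModel = MODEL) {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}
```

If a longer timeout becomes available later, passing `"gpt-4"` as the third argument restores the original behavior for individual calls.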

Mobile Responsiveness

I’ve tested the experience on my iPhone, and I’m happy with the result.

Generating Image Data

At some point, I’d like to be able to use the DALL-E API to generate an SVG exercise figure based on the selected image in the exercise request.

This way, newly introduced exercises to the catalog require minimal effort to fit into the look & feel of the exercise library.

Conclusion

ChatGPT has significantly boosted my coding effectiveness, helping me save valuable time. By integrating it into the NextJS + React exercise catalog app development process, I was able to generate code and implement user interface enhancements such as the exercise suggestion mechanism quickly.

While ChatGPT was an essential asset in the development process, developers must balance their reliance on AI-generated code with their critical thinking skills. Over-reliance on AI-generated code can lead to reduced understanding of the implemented code and unintended complexity.

I invite you to share your stories, give feedback, or suggest additional features you would like to see in the app. As demonstrated by the exercise catalog app, embracing AI-assisted development tools can lead to impressive results and foster continuous learning. Let’s use these tools responsibly and thoughtfully to enhance our productivity and skills in the ever-evolving world of software development.